title: MACHINE CODE

projekt: zer0

author: Borislav Nikolov

About this book

I am writing this for my daughter. It is what I would like to teach her, maybe some of it will be useful to you as well.

I want to teach you what it means to compute and what really is a program.

What is the electricity of the if? Why does if even need electricity?

From electrons and wires, to machine code, to neural networks. Making your own computer, your own machine language, and your own language, train your neural network on pen and paper.

But most importantly, I hope to teach you to be curious and kind.

How does one do impossible things? Like making a computer from wires, when they know little about wires or computers? To do an impossible thing you need to either not know that it is impossible, or, not care if it is.

Ignorance, curiosity and kindness.

I am writing this as we have entered changing times - excitement and fear are in the air. Maybe we create the next evolution of intelligence, maybe it helps us solve all our problems, or maybe it destroys or enslaves us; maybe it's fake and doesn't do anything at all.

Time will tell.

Whatever the outcome, nothing can stop us from creating, reading and writing anything we want, one word at a time, one symbol at a time.

Now more than ever, when the internet is dead.

NB: This is the book before the book, I will rewrite it, hopefully 3 times.

All that is gold does not glitter,

Not all those who wander are lost;

The old that is strong does not wither,

Deep roots are not reached by the frost.-- J.R.R. Tolkien, The Fellowship of the Ring

Symbols

Since we are born, and even before that, we interact with the world through collision and violence. For me to live, something must die, be it a plant or an animal. For me to stand, the floor must push me. For me to see, light must crash into my eyes. For me to speak, I must shape the air. And yet, on the inside, we live in a dream, from the violence we create a world, a universe, in our mind. Our mind projects the reality inside of itself. And since each of our minds is uniquely shaped by violence, I can only interact with you through symbols. Symbolic language is hundreds of thousands years old, and it is possibly our greatest creation.

In this chapter I will try to explain what are symbols, how they transform and

evolve, how does it feel to do symbolic execution, and what is computation.

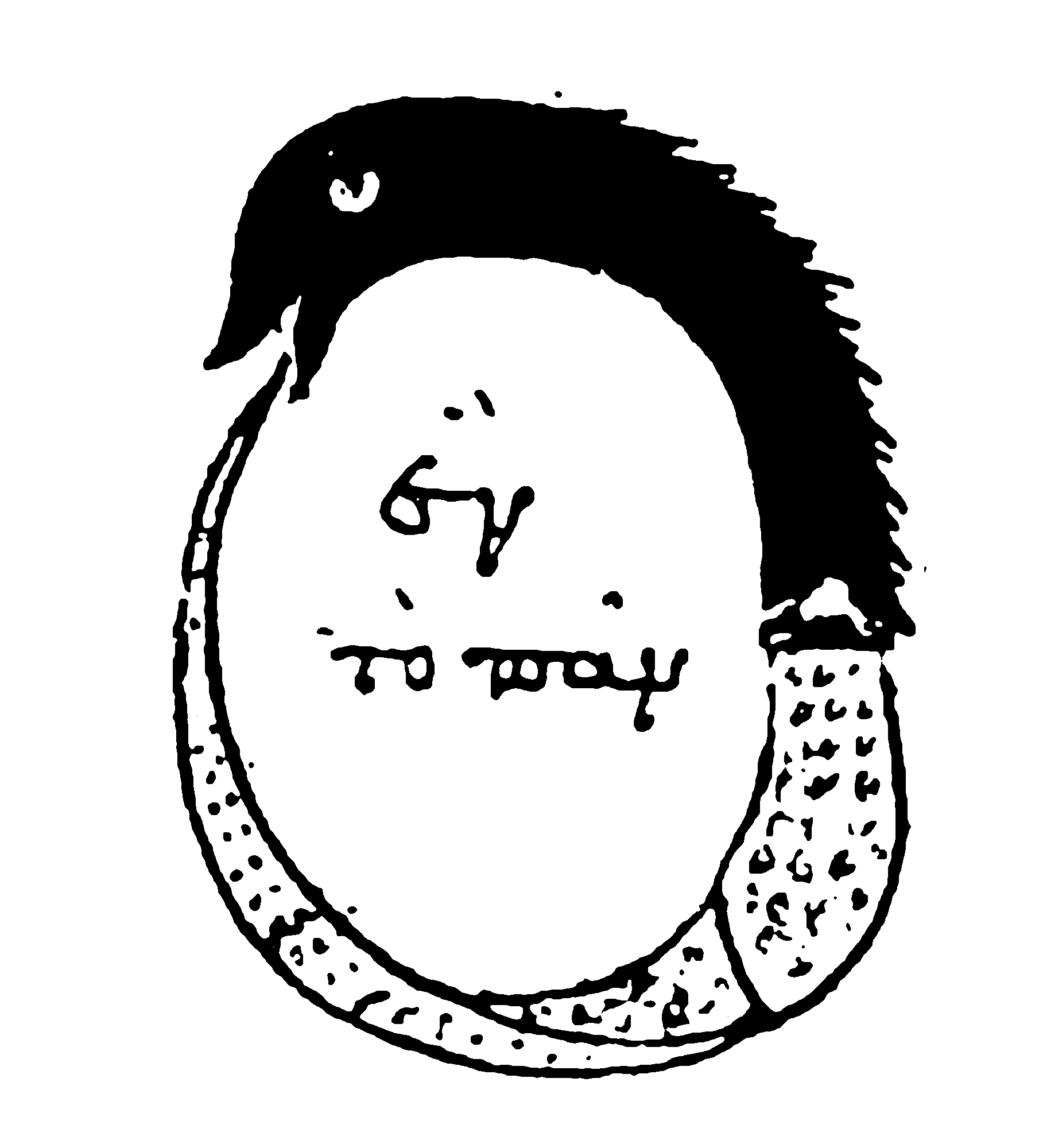

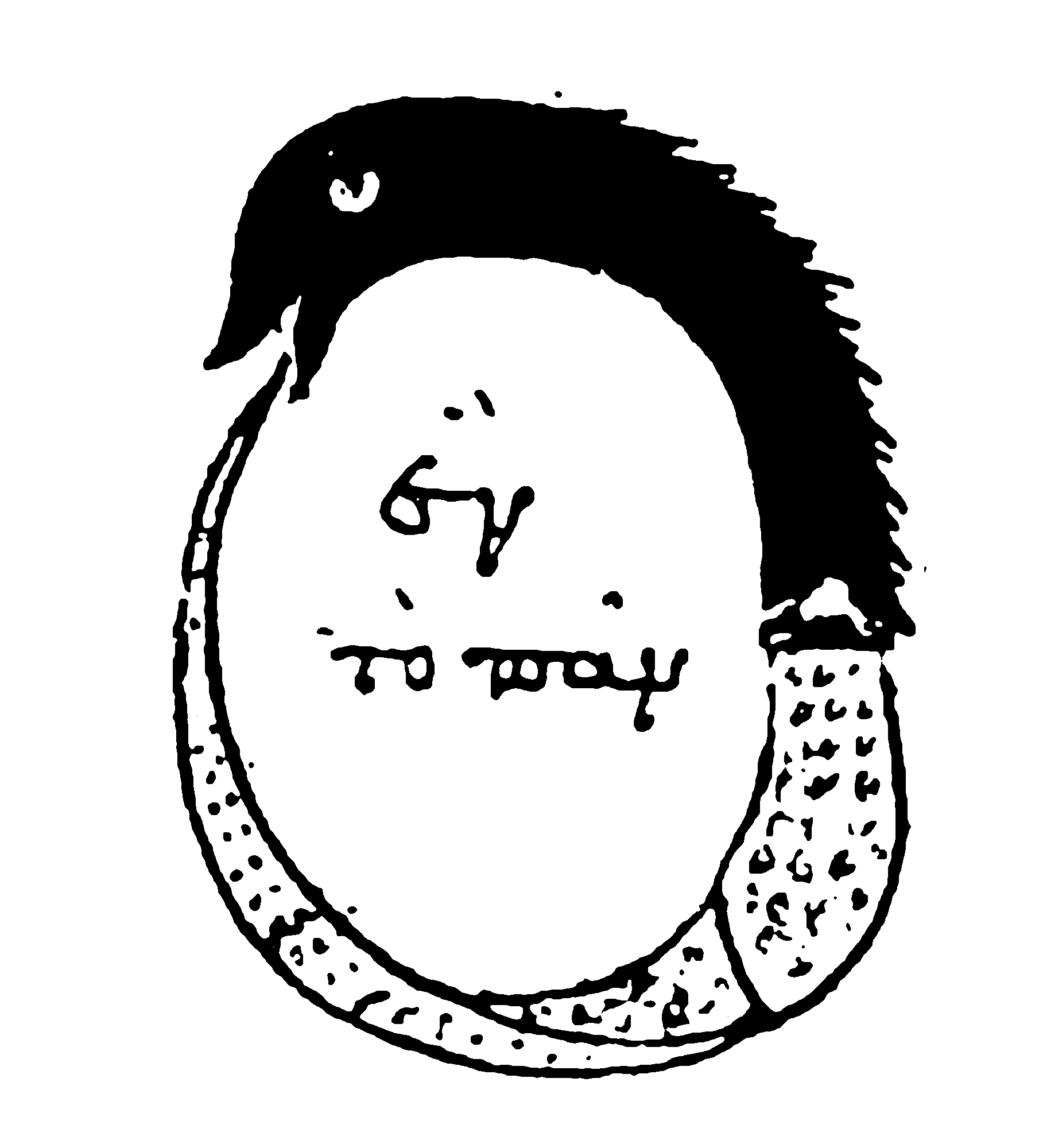

This is the Eye of Horus, the left wedjat eye, it is an ancient Egyptian symbol, more than 5000 years old. The very first time you see it, it will speak to you. You will try to explain it, examine it; without reason.

Horus lost his left eye in a battle with Set, the god of chaos. Later restored by Hermes Trismegistus, Thrice-Great Hermes, also known as Thoth, the god of wisdom, its restoration is considered a triumph of order over chaos. The left wedjat is the symbol of the moon. And since it was healed from wisdom, it became a symbol of healing and renewal. You might notice today on some medications or recipes symbol Rx (℞), it originates from the Eye of Horus, you can see the shape of the R, later it became the symbol of Jupiter, and then the first letter of the Latin word 'Recipere'.

How much cultural experience is packed in this symbol? 5000 years of hope, hundreds of millions of people praying to it every day, teaching their children how to use it, how to draw it..

This is the alchemist symbol of the philosopher's stone. The second Adam.

It represents the evolution of a whole culture, whole societies have been violently transformed because of it. The philosopher's stone, some say, is able to transform any metal into gold. You might think it is manifestation of the infinite human greed, but others believe it is the transformation of the soul. The expression of Anima Mundi, the soul of the world. The world, Plato says, has soul and reason.

Now, pause for a bit and think, is the symbol changing our culture, or our culture changing the symbol.

To understand one symbol means to understand everything.

The word 'sun' is only 3 symbols, and it itself is a symbol, ⬤ is only 1 symbol, however their interpretation is up to you. When you read them, what do you see? I see a sunrise, cycling to work, passing the lake, a burning star, I hear the sound of the wind, I can even smell the air. You might see a sunset, or feel the nnheat, or might even see the moon on a cold night. Information lives in two worlds, outside as a symbol and inside as a dream. Neither world is more real than the other.

A symbol is not merely a group of dots, a sound wave, or a shape. When I write

the symbol for one: l, I am not just making a mark, I am creating a bridge

between the physical and the abstract, or in some cases between two abstract

worlds. This bridge works in both directions: the physical symbol shapes our

mental concept of 'oneness', while our understanding of 'oneness' gives meaning

to the symbol. Also that was not the symbol for one (1), that was small letter

L: l, you made it into one when you thought about it being a number.

The symbols change us and we change their meaning. We interact with symbols in two ways, we can interpret them or evaluate them.

Interpretation is giving meaning to the symbol, for example reading black cat,

you interpret it and imagine a black cat, unless you have aphantasia, in which

case you just think of a black cat without an image.

Evaluation is the process of giving life to symbols. When you see 2 + 2, your

mind doesn't just read characters, it gives them meaning, as it iterates through

ideas and experiences, it produces new symbol: 4, without you even wanting to

do it, I dare you, try to not do it, try to read 2 + 2 and not think of 4.

The symbol's meaning and the process it invokes in you, exists neither in the

symbol nor in your mind, but in their interraction and transformation.

Evaluation of symbols is to execute the symbol, let is live and act, according

to its relationship with everything else.

I am very interested in this particular relationship between the symbols and their observer, or evaluator, especially when the evaluator is symbolic as well.

There is a famous example from Gödel, Escher, Bach: can a record player play all possible records? What about the record that produces vibrations that damage the record player? Can a human think all possible thoughts? What about thoughts that make you inhuman?

In order to continue, I must explain what evaluation is, and what computation is, in its deepest sense, since we, humans, can evaluate symbols, I will try to make you experience symbolic evaluation and transformation.

Lets start with the following sentence:

I am what I was plus what I was before I was.

Before I began, I was nothing.

When I began, I was one.

While reading the words you interpret them, you asign them meaning and understand them. Now lets evaluate them, but I will rewrite the riddle in a different way, even though it means the same thing, it will be a bit easier to write down the process.

F(n) = F(n-1) + F(n-2)

F(0) = 0

F(1) = 1

Surprise! It is the Fibonacci sequence.

Now, lets evaluate it in our head:

0 | 0: Before I began I was nothing

1 | 1: When I began I was one

2 | 1 = 1 + 0 I am what I was plus what I was before I was.

3 | 2 = 1 + 1 I am what I was plus what I was before I was.

4 | 3 = 2 + 1 I am what I was plus what I was before I was.

5 | 5 = 3 + 2 I am what I was plus what I was before I was.

6 | 8 = 5 + 3 I am what I was plus what I was before I was.

7 | 13 = 8 + 5 ...

8 | 21 = 13 + 8 ...

... | ...

50 | 12586269025 = 4807526976 + 7778742049

... | ...

250 | 7896325826131730509282738943634332893686268675876375 = ...

... | ...

Try another one:

This sentence is false.

The previous sentence is true.

You might feel physical pain while evaluating it, if you keep cycling between the statements, deeper and deeper into confusion. Kind of like this optical illusion, white dots appear and disappear, they are there, but they are not.

Experiencing true infinity by just evaluating few symbols. But the infinity is made by you both having vocabulary, and applying the English grammar rules.

Lets deconstruct their grammar:

This sentence is false.

- Main clause: "This sentence is false."

- Subject: "This sentence" (a noun phrase: determiner "this" + noun "sentence")

- Verb: "is" (copula)

- Complement (predicate adjective): "false" (adjective describing the subject)

This is a simple linking structure: Subject + Linking Verb + Adjective.

The previous sentence is true.

- Main clause: "The previous sentence is true."

- Subject: "The previous sentence" (a noun phrase: determiner "the" + adjective "previous" + noun "sentence")

- Verb: "is" (copula)

- Complement (predicate adjective): "true" (adjective describing the subject)

Again, a simple linking verb pattern: Subject + Linking Verb + Adjective.

This sentence is false. The previous sentence is true.

When taken together, these two sentences form an infinite loop:

First sentence: Subject ("This sentence"), Copula ("is"), Complement ("false" - adjective). Second sentence: Subject ("The previous sentence"), Copula ("is"), Complement ("true").

What is a subject, what is a linking verb, what is a noun:

- Subject: The doer or main focus of the sentence.

- Verb: The action word, or in the case of a "linking verb," a state-of-being word (e.g., "is," "are," "was," "were").

- Complement: Information that follows a linking verb and describes or renames the subject. This can be an adjective (predicate adjective) or a noun (predicate nominative).

ChatGPT did the grammar deconstruction, I know almost nothing of English grammar.

Deconstructing the vocabulary:

- "this" - demonstrative determiner/adjective pointing to the current sentence

- "sentence" - noun referring to a grammatically complete unit of language

- "is" - present tense form of "to be", functioning as a linking verb

- "false" - adjective describing a statement that is not true

- "the" - definite article specifying a particular thing

- "previous" - adjective describing something that came before

- "true" - adjective describing a statement that is factual/correct

But where do we stop?

- "demonstrative" from Latin "demonstrativus" meaning "pointing out", "demonstrare" = de- (completely) + monstrare (to show) a word that directly indicates which thing is being referenced

- "determiner" from Latin "determinare" = de- (completely) + terminare (to bound, limit) a word that introduces or modifies a noun

- "adjective" from Latin "adjectivum" = ad- (to) + jacere (to throw) a word that describes or modifies a noun..

- ...

How much vocabulary is needed for the infinity to occur? How much grammar is needed? How can the language's gramatical rules be written in the very language they describe? What about the grammar rule: "A sentence must end with a period.", is it gramatically correct? What if it was "A sentence must end with a period" without the period?

In the same time, when you are reading the sentences you are not thinking about the grammar at all, nor about the vocabulary, nor about the words even. Almost instantly confusion arises from the paradox. I am not even sure you and I are reading the sentence in the same way. This is quite strange is it not? Most people can read this without any trouble:Tihs scnetnee is flase. The perivuos scnetnee is ture., and get instantly into confusion. Somehow words are still readable if the first and last letter are correct. But if we read scnetnee as sentence, then what is actually the symbol of sentence?

I have tricked you a bit. This sentence is false is already a paradox in

itself. If the sentence is false then it must be true, since it claims to be

false, but in that case, it must be false because that is its statement, true,

false, true, false.. Epimenides declared: all Cretans are liars, and he

himeself was a Cretan, and people say he always tells the truth. This paradox is

even in the Bible, Titus 1:12 12 One of Crete's own prophets has said it:

"Cretans are always liars..", but it does not say if whoever declared the

statement is a liar or not. However Crete's own prophet must be Cretan as well.

Now lets try something that requires more steps, so that you can experience the application of logic rules:

S1: The next sentence is true.

S2: The fourth sentence is false, if the next sentence is true.

S3: The previous sentence is true.

S4: The first sentence is false.

We will rewrite it so it is easier to evaluate

S1 → claims S2 is true

S2 → claims (if S3 is true then S4 is false)

S3 → claims S2 is true

S4 → claims S1 is false

If S1 is true:

- Then S2 must be true (by S1)

- If S2 is true and S3 is true, then S4 must be false (by S2)

- S3 confirms S2 is true

- But if S4 is false, it means S1 is true

If S1 is false:

- Then S4 is true (since S4 claims S1 is false)

- If S3 is true, then S2 must be true

- If S2 is true and S3 is true, then S4 must be false

- But we started by assuming S4 is true

Now we are one layer above the grammar and its rules, the sentences themselves have rules, in our case S4 must be false, in order for S1 to be true, which leads to contradiction. But what is the transformation here? The sentences are the same, written on the page, what is being transformed? It is your thought. You are transforming each sentence, from true to false and so on, which is itself changing the rules, since the sentences are their own rules.

This process of evaluating information and allowing it to transform itself is the act of computation.

I am not trying to say that you are a computer, I am trying to show what it means to experience computation. The fact that your brain can compute statements, that does not make you a computer, just as your heart pumping blood, does not make you a pump.

This duality of existence of information, both as its state and as its transformation, both as the actor, and the play, this duality is what we will investigate in this book. The painter and the painting.

Now try to evaluate this Zen Koan:

Yamaoka Tesshu, as a young student of Zen, visited one master after another. He called upon Dokuon of Shokoku.

Desiring to show his attainment, he said: "The mind, Buddha, and sentient beings, after all, do not exist. The true nature of phenomena is emptiness. There is no realization, no delusion, no sage, no mediocrity. There is no giving and nothing to be received."

Dokuon, who was smoking quietly, said nothing. Suddenly he whacked Yamaoka with his bamboo pipe. This made the youth quite angry.

"If nothing exists," inquired Dokuon, "where did this anger come from?"

This is what computation is, the process that gives life to information, allowing it to transform itself. A program is a sequence of computations, and it itself is information.

Notice that in this definition, symbols are not required for computation, but in order for us to manipulate or understand computation, symbols are required.

I read what I write.

Each reading changes what I write next.

Each writing changes what I read next.

By now, you have intuition about what evaluation is, or at least how it "feels" when you are evaluating symbols, however, you were doing it unconsciously. Now we will create a former rule that we want to apply, step by step.

I will show you the most amazing game you have seen, you will not be the

player, you will be the board. Start by writing the following numbers on paper

0 0 0 1 0 0 0.

0 1 2 3 4 5 6 (column indexes, so that I can reference them)

-------------

0 0 0 1 0 0 0

Each round, you write a new row, applying the following rules to each cell.

Look up to the previous row, and check itself and neighbors, in our example on cell 2, on the left you have 0, in the middle is itself, with value 0 and on the right you have 1, Cell 6 has 0 on the left, and we get outside of the board on the right, so we assume 0, same for cell 0, on the left we assume 0, on the right is also 0 (cell 1 is 0 in our example).

The rule is the following:

left,middle,right 111 110 101 100 011 010 001 000

output 0 0 0 1 1 1 1 0

So in our example, if we evaluate the first row, and apply the rules

0 1 2 3 4 5 6

-------------

0 | 0 0 0 1 0 0 0

1 | 0 0 1 1 1 0 0

You can see on cell 2 when you look at row 0, cell 2, on the left it nas 0, on

the right it has 1, so we look in the rules and see 001 gives us 1. and on

cell 3 010 gives us 1. Lets do few more rounds.

0 1 2 3 4 5 6

-------------

0 | 0 0 0 1 0 0 0

1 | 0 0 1 1 1 0 0

2 | 0 1 1 0 0 1 0

3 | 1 1 0 1 1 1 0

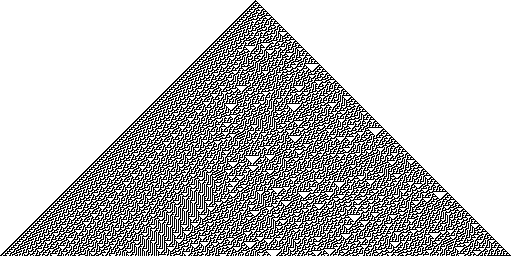

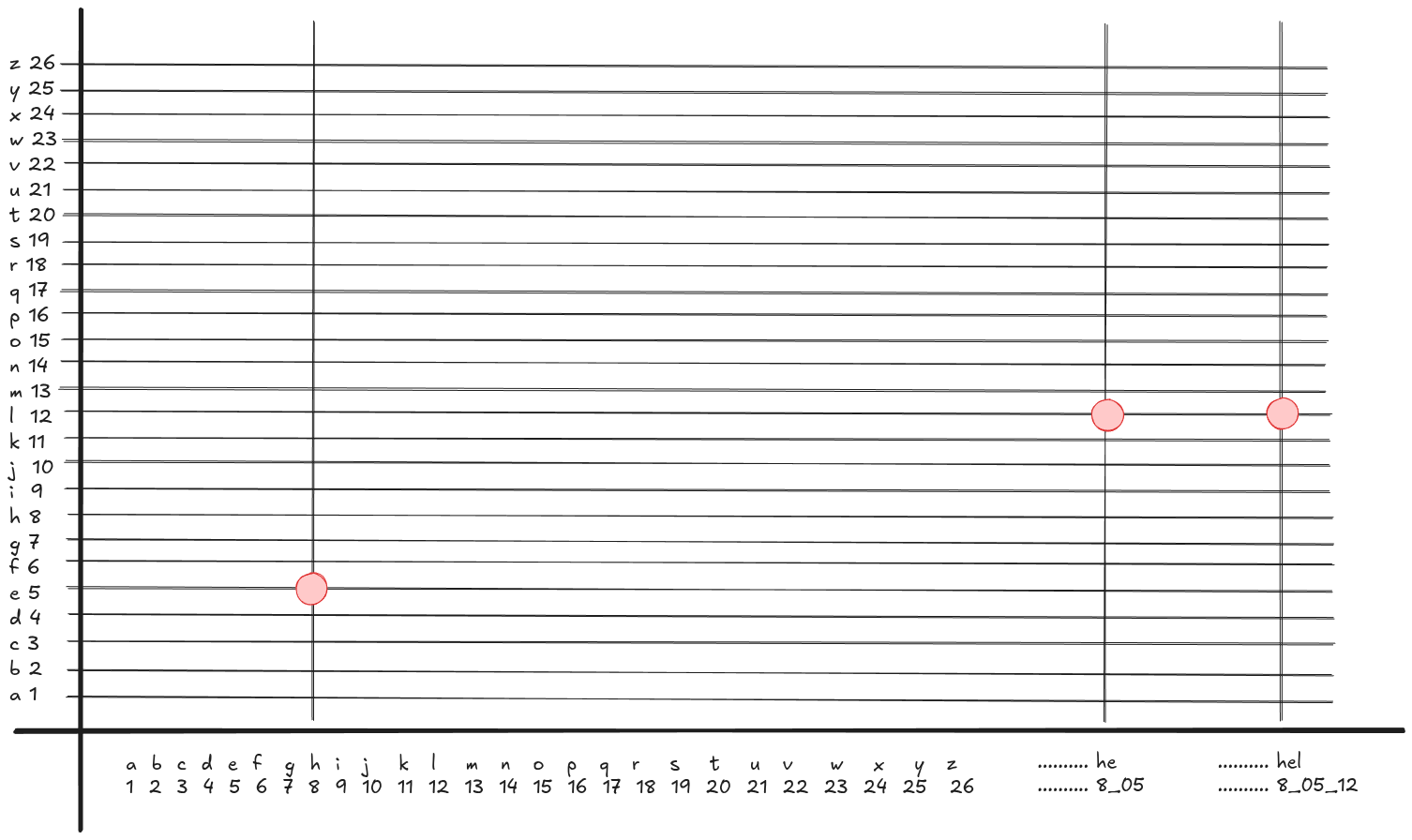

The board is too small to see, but the pattern it creates, is actually amazing.

You can see the rules clearly and also the pattern they generate.

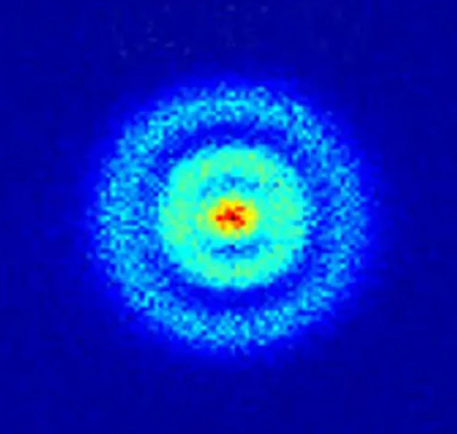

If you create enough columns it becomes this:

There are more games like this, that play themselves, they just need a board to

evaluate the rules. The one we just played is called rule30, and it generates

this interesting shape. The interesting thing is, if our first row is 0 0 0 0 0 0, applying the rules produces another empty row, because 000 outputs 0. So

when looking at an empty page, it might seem there is nothing going on, but underneath, this amazing pattern was hidden.

As I said, a program is a sequence of computations, but in this game, what is

the actuallty the program? Is it the rules, is it the process of applying them,

or the very first row 0 0 0 1 0 0 0? I would argue that the rules are the

program, and 0 0 0 1 0 0 0 is the initial condition, the application of the

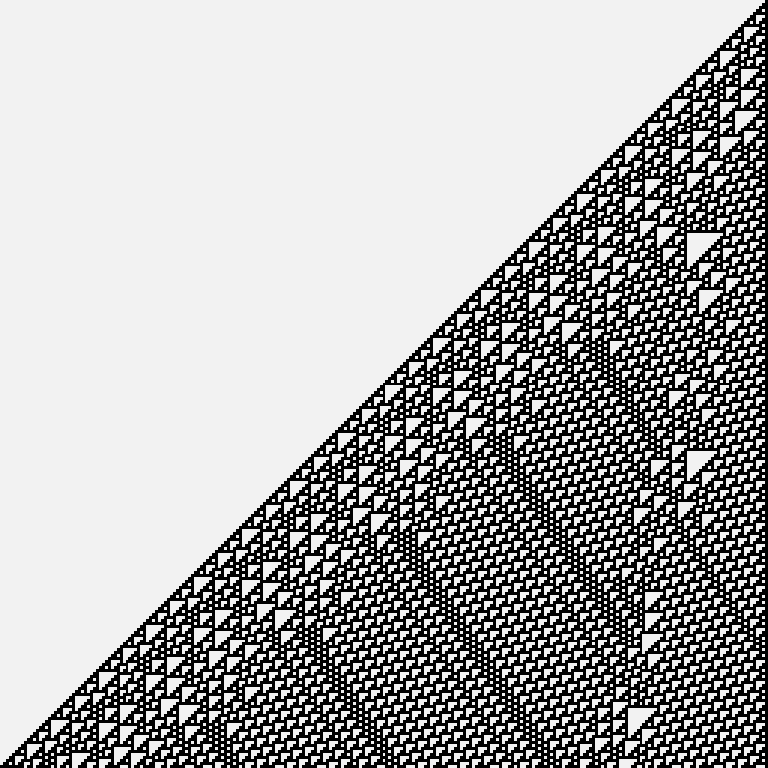

rules is computation. But what about rule 110, the rules change just a tiny bit,

but it has profound consequence.

left,middle,right 111 110 101 100 011 010 001 000

output 0 1 1 0 1 1 1 0

If you run it by itself with 0 0 0 0 0 1 it creates this beautiful pattern

But, if you run it against an infinitely repeated specially crafted background pattern, then rule110 becomes a computer. It still amazes me, the relationship between the background, the rules, and their evaluation. And the process of abstract computation.

There are other zero player games that are computer, if you see them play you might notice how this might work.

Conway's Game of Life is a famous one, it is not one dimentional like rule30 or rule110, which operate row by row, but it is two dimensional, grid based. There are rules about how the cell evolves depending on its neighbours.

- Birth: A dead cell with exactly three living neighbors becomes alive in the next generation

- Survival: A living cell with two or three living neighbors stays alive

- Death by loneliness: A living cell with fewer than two living neighbors dies

- Death by overcrowding: A living cell with more than three living neighbors dies

Those games are real computers, and by that I mean it is a system that can transform information and let information transform itself as it is being evaluated. People are actually writing programs for the game, and I kid you not, this game can run any program that you can run on your computer, or on any other system that we call a computer.

We will get deeper into the topic of computation later. For now, I will leave

you with the confusion of the program that is a game that is a computer. Do a

Life in Life in Life search in youtube if you want to see how it looks.

It seems, the symbols, their interpretation, their evaluation, and their output, all live in separate worlds, and yet, their output can create new symbols, and the symbols can change their evaluation rules, as the rules are also symbolic.

It also seems, that incredibly simple rules, can create infinite complex systems. Including systems that can simmulate themselves, or simmulate worlds.

Now, in case of rule110, what is actually the program? Is it the background? Is it the initial condition? Is it the rule itself? What if we have rule110 written in the background of rule110, so that it evaluates the rules of rule110?

That is what Life in Life in Life does. It is a game of life, inside game of

life, inside game of life.

But you must think above, beyond the rules, beyond the evaluators, beyond the state, but in their relationship, as the rules can change the evaluators, who change the state, and the rules are state as well.

The world, Plato says, has soul and reason.

If you hear a voice within you say you cannot paint, then by all means paint and that voice will be silenced.

-- Vincent Van Gogh

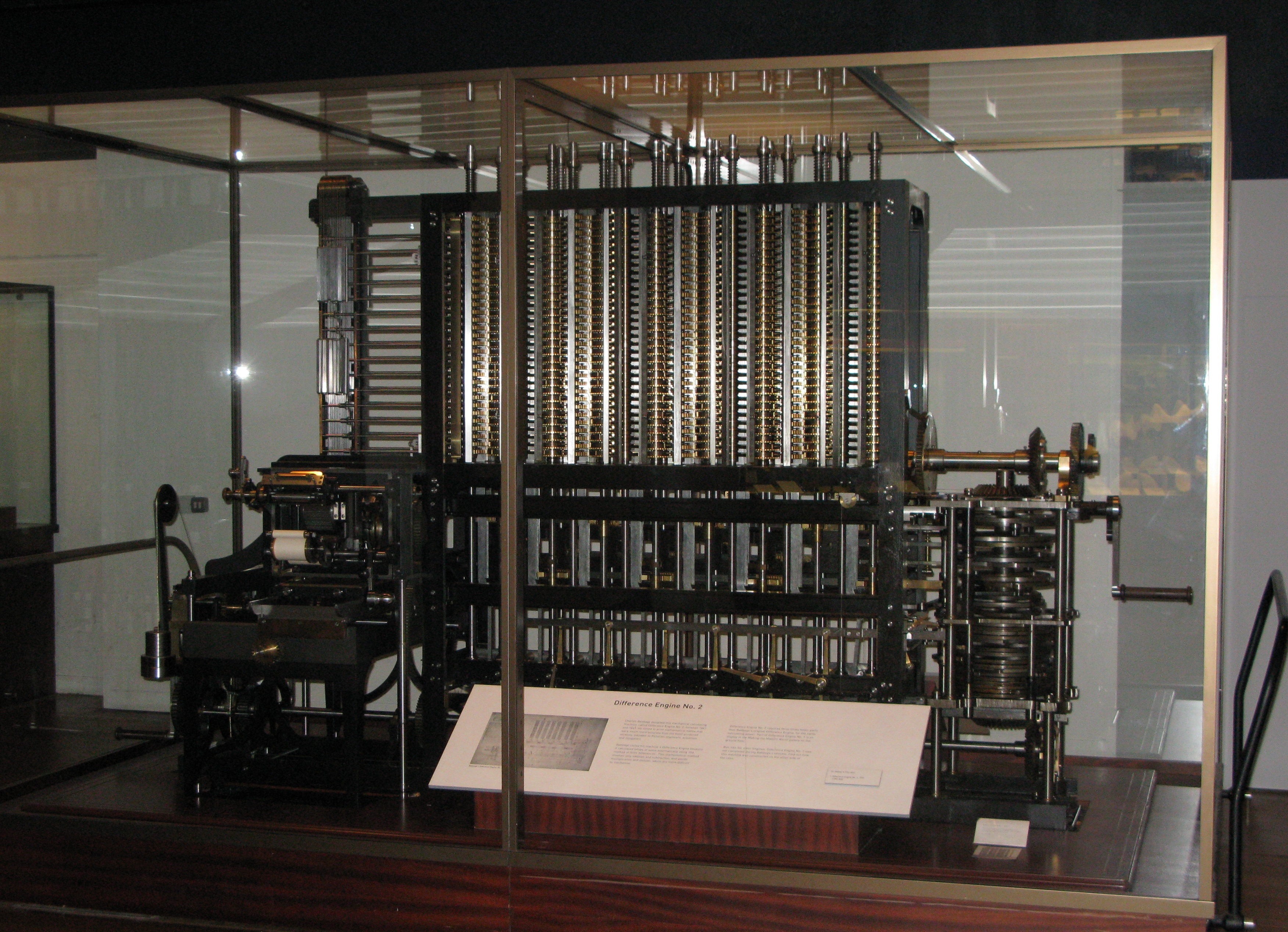

Brief Computer History

Computation is transformation of information, a program is a sequence of computations, and it itself is information.

Not all programs are run by computers.

For example, there are programs in the old looms that were making fabric. They were almost computers, but not quite, and still they could execute program instructions. Or a music box - it has a program, but is not a computer.

You can see the program on the cylinder, each spike is in a particular location. When you turn it, it kicks the metal comb to make a sound. You could say that the computer that executes the program is the universe itself, but it is not the music box.

In order for something to be called a computer, it must be able to store and retrieve information, and use that information to make decisions about what to store or retrieve. In an infinite loop, the choices depend on the information, and the information is shaped by the choices, and of course, choices are information themselves. Any system that has those properties can execute any program ever written, and those that would be written, man-made or not.

There are many kinds of computers: biological, mechanical, emergent, digital, analog and many more. There are computers in every cell in our bodies, in our immune system. Some systems are so complex we don't even know if they are computers, like the weather system, ant colonies, fungi networks, or even the global economy.

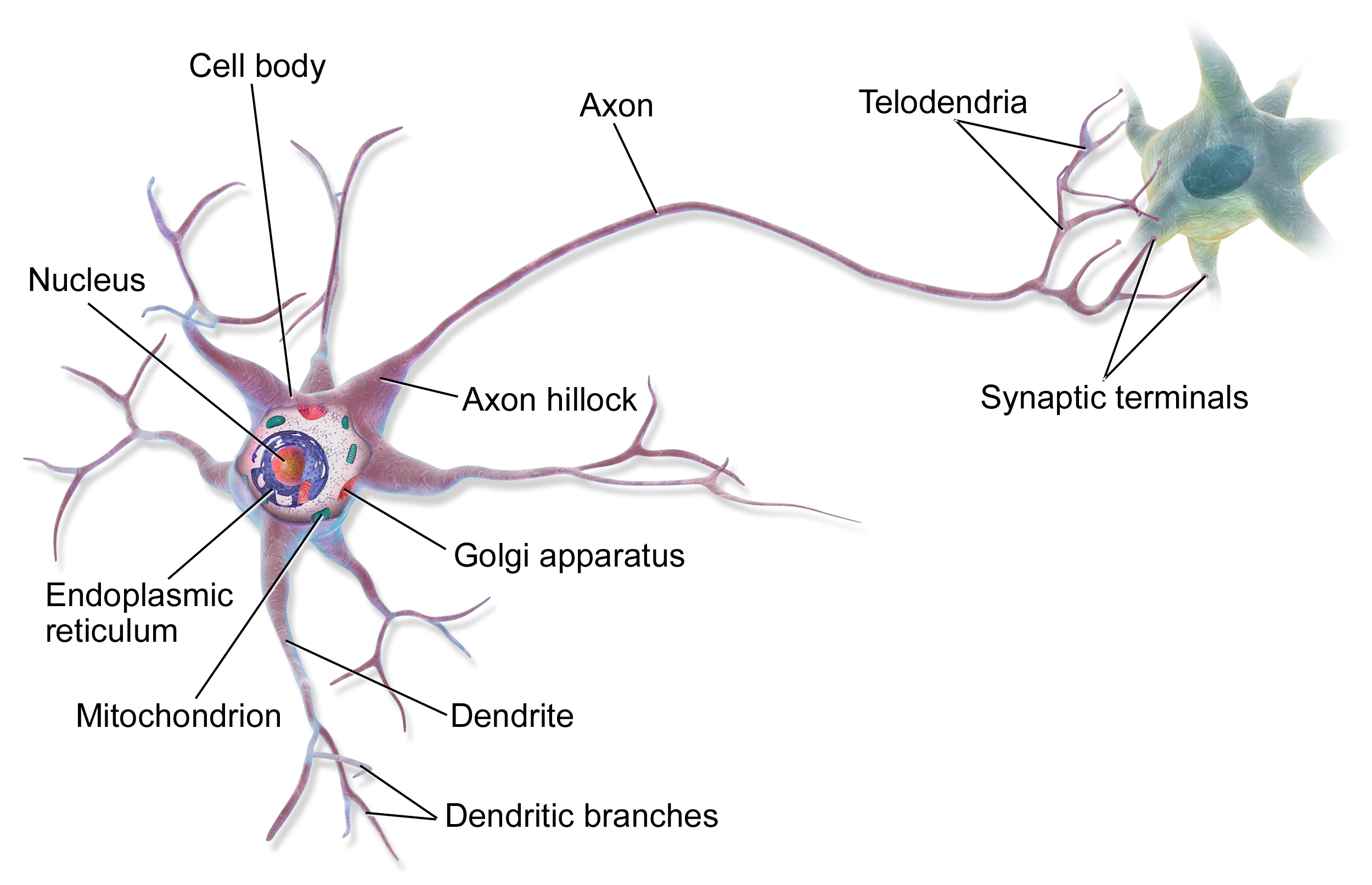

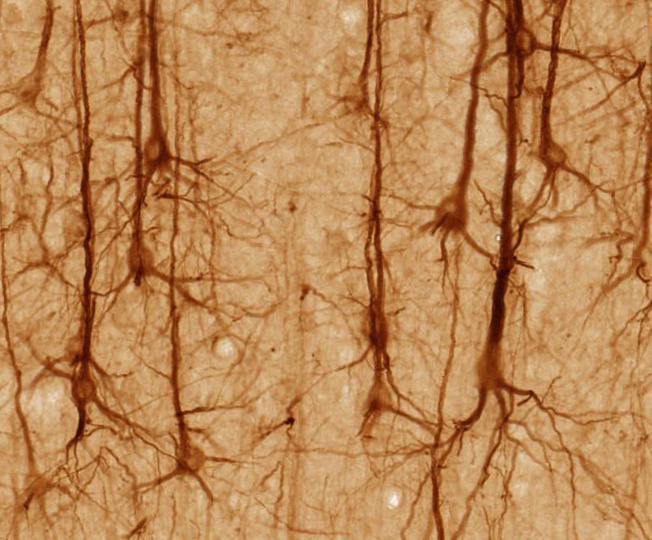

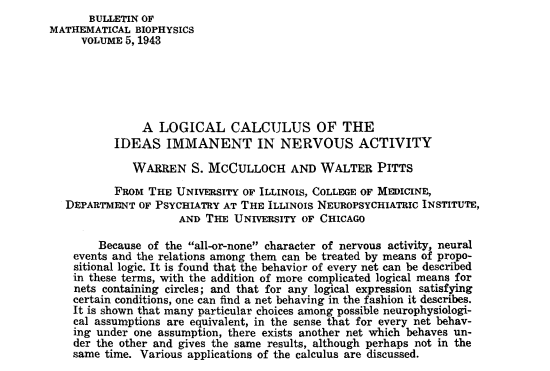

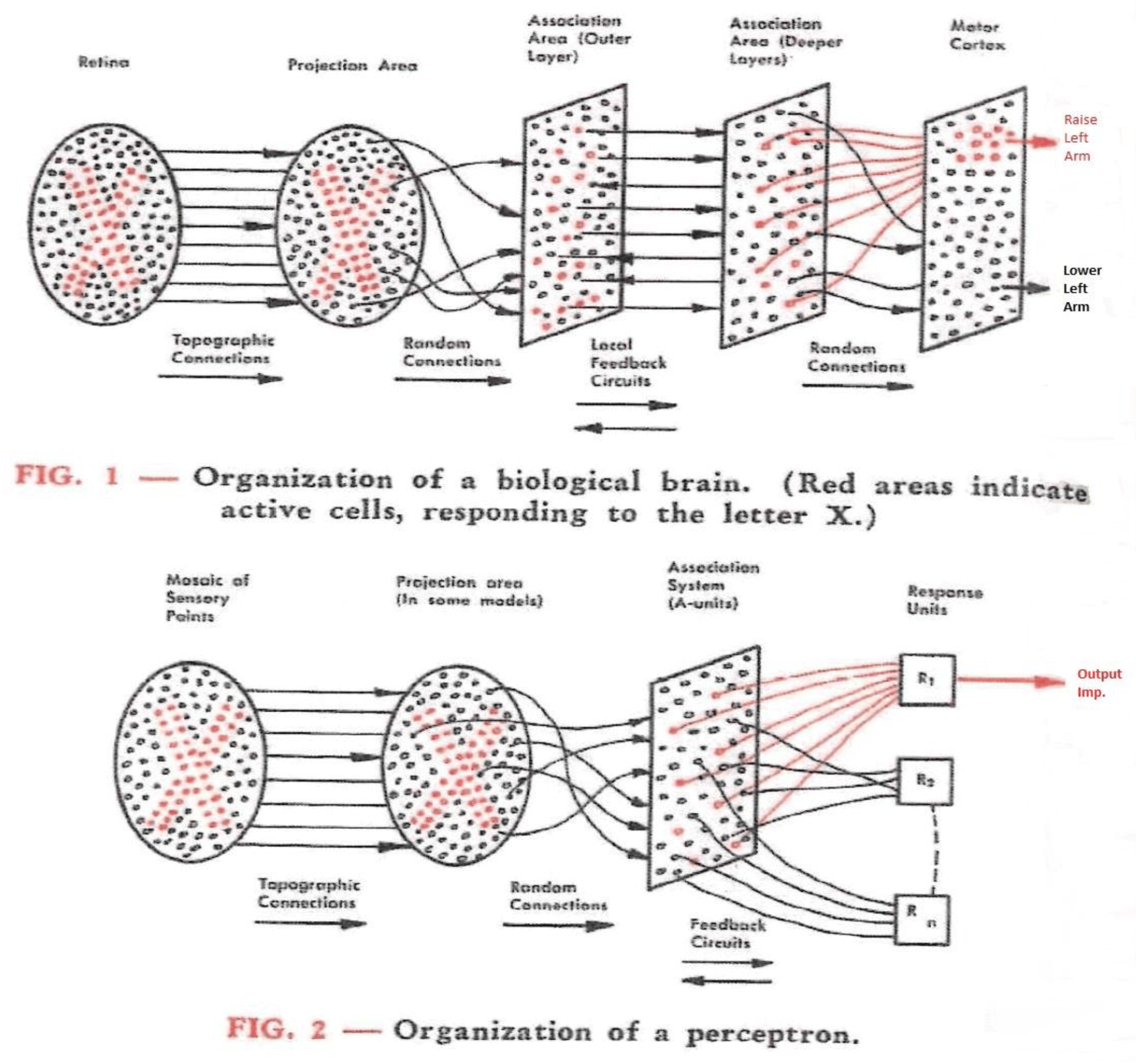

The most interesting computers are those that can write their own programs. They are both the programmer and the program. Deep Neural Networks are such a computer, self-programming machines - the most recent ones are called Transformers, discovered in 2017. It is a machine of many, many layers. Each layer transforms its input to prepare it for the next layer, and in the end, the last layer's output is the first layer's input, forever and ever, in an infinite loop, until its program emits a STOP output. When we train it, it learns how to program the layers so that it can output what we want from it. It does not know right from wrong, truth from lie, it just outputs what its program thinks is needed. Some say that we do not train it, but we grow it, and it trains it self.

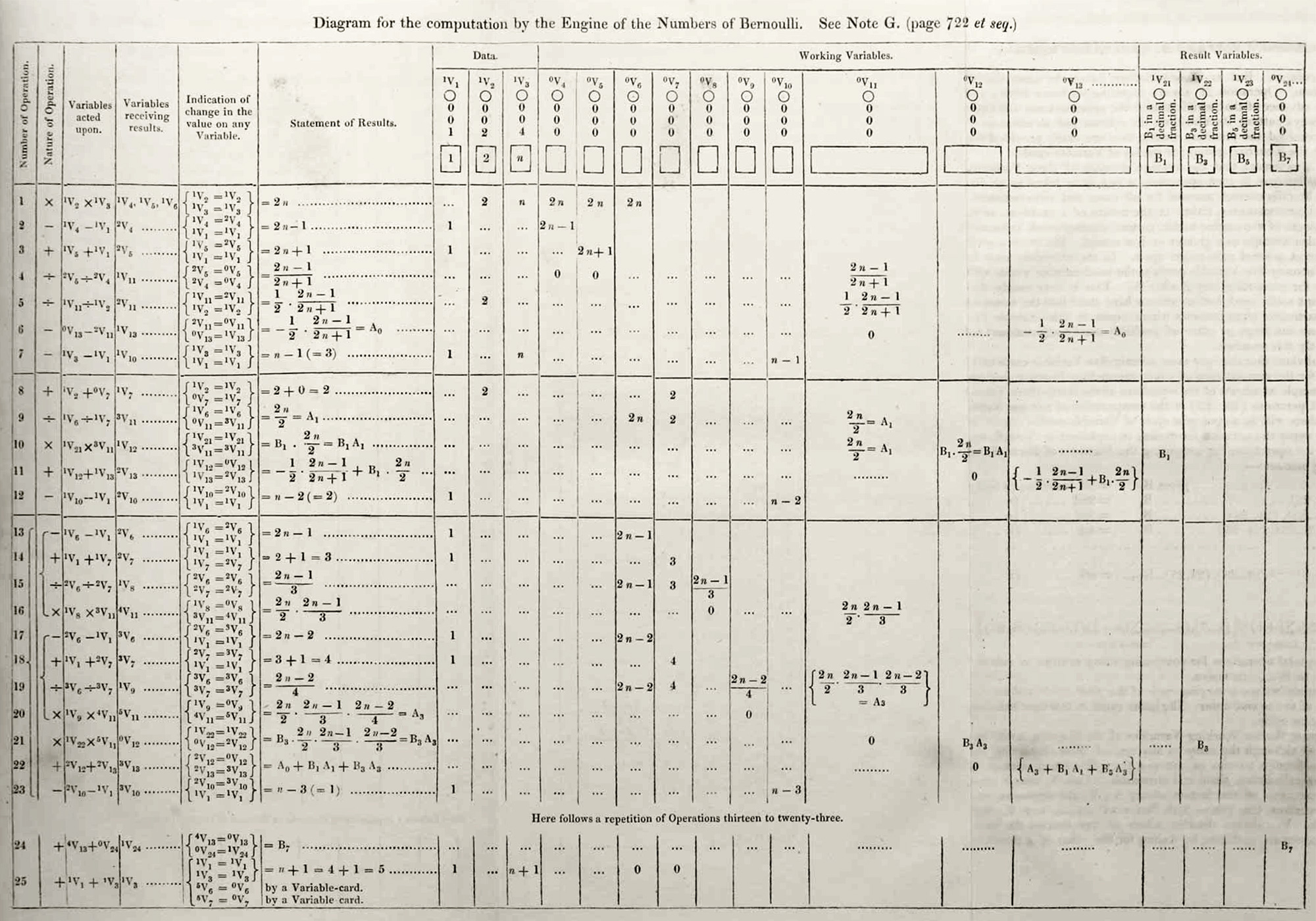

It took humanity millennia to discover the computer. After Charles Babbage in the 1830s, then in 1936, Turing and Church formalized it. Since then, trillions of lines of code have been written, and yet we still do not know how to truly program. Despite the lack of understanding, we managed to create simulacra that are enough to control and empower our digital society. In the modern world, programs control your life. They will work for you, spy on you, teach you, heal you, or physically harm you. At this very moment, programs are deciding who to hire and who to fire, they decide which movie you should watch, and who should be your friend.

For the first time since 1936, we have a glimpse of the next level of computer organization. For the first time, a computer that can do something for you.

To build the new world, you must understand the old. It is imperative to remove the confusion of modern software and understand the digital computer at its core, invent your own language to interact with it, to think from first principles.

A new age is coming, a new way to interact with computers and new ways to program them and a new way for programs to interact with each other.

Take your time, relax and ignore the noise, ignore the design patterns, ignore the programming paradigms, libraries, frameworks and conventions. Reinvent the wheel!

Today most developers have forgotten, and some never knew, what it means to program. And I must tell you, we have not even begun to understand it, not even a little bit.

So first things first, I will tell you how I learn.

What I can not create, I do not understand

-- Richard Feynman

Learning how to learn

Learning new things is a frightening and lonely experience. To learn means to destroy oneself, and be reborn from the ashes. Do not underestimate the courage and sacrifice it requires.

In order to deeply learn something the most important thing is to be honest and humble. Find out what you don't understand. To be honest with yourself is not as easy as you might think, and in fact, a life spent in understanding yourself is a life well spent.

Every single mind is different, we are actually more different than alike, some people cannot hear their thoughts, some can see them, some can't imagine pictures when they close their eyes, some have internal clocks they can measure time. Some people see sounds as colors and others can taste emotions.

Written text, even though it is the best we have, is reducing one's mind into almost nothing in order for us to communicate. What you will read is not what I will write. When we read, half of what we read is from the book to us, and half is from us into the book.

I can only share my experience and how I learn, but I know it is not the same for you.

First I do not care of names, knowing the name of something does not help you to understand it. Just as my name says almost nothing about me. Knowing the name of the curved triangle (had to google it, its Reuleaux triangle) that can make a square hole, has nothing to do with what it does.

The most important thing for me when learning, is to understand what I do not understand, to feel doubt and confusion, and even fear. It feels as if I am in an endless black sea, drowning. Once I get there, I try to sense what exactly got me there, I can look up and see lightnings, and I can follow them back. It is really hard to get there, it is a frightening place to be, and I unconsciously avoid it.

I can never know, even if you tell me, what you feel when you get there, but my advice is, don't run away from it.

There are five ways that I have found to get close to my boundary of understanding, into the doubt:

-

DESTROY- destroy a ball pen, take the ink out, take the ball out, look at it under a microscope, examine it. Do not be afraid. Delete all files on your computer, punch a hole through the hard drive, look inside. Since I was a child, I just broke everything, from my walkman to my sister's barbie doll (I was very interested in how they made the knees to work). Destruction has always guided me, into deeper understanding. It drives my curiosity and my curiosity drives my destruction. -

CREATE- create a programming language yourself, a computer, a game, or a spoon. To create something will give you the deepest understanding of it, and deepest appreciatiation for its existence. -

REDUCE- reduce the thing to its absolute essence and examine it, reduce the computer processor from billions of elements to hundreds. Reduce a polynomial to few symbols. Reduce a multihead transformer to 1 head, remove the layer norms, make it with 2 layers, make it 3 dimensional, with 2 token vocabulary.. keep going until you can do it with pen and paper. Understand the residual flow. -

TEACH- explain why the sky is blue in the morning and red in the evening to a 5 year old child, why the moon is not falling on the earth, why can the moon shadow our sun, why the earth is warm and space is cold? -

QUESTION- Why is it the way it is? What does it actually do? What happens if I do this? How does it work? Do not be embarrased, from others or from yourself, to ask questions, especially those questions you think are stupid. Sometimes I would sense fear to ask a some question to myself because I feel its stupid, I usually get so angry about that, I write the question down and go into the black sea out of spite.

It's important to pay attention to yourself while you are learning, your attitude is important, your gratitude is important, why you are doing it is important. You are changing yourself. New ideas will come, if you listen. Sometimes you will be more lost than before.

If you were to become a leatherworker, you must appreciate the animals that make it and how they live, the scars it has. You must look at it under a microscope, understand why it is the way it is. You must test it, soak it, shape it, and you must know, with every stitch you do, you will grow. Remember the saddle stitch, where one needle goes out, the other needle goes in. Stitch after stitch. A belt has thousands of stitches, 3 millimeters apart. If you give everything you have in each stitch, it will be a good belt.

If you were to become a chef, you must understand chemistry, and how we feel through our tongues, how our molecular sensors vibrate, and how fats, proteins and sugars are changed with heat. How do parasites live, and how to kill them. As everything eaten is transformed into its eater. Respect what you eat and how you cook it. As the chef says: "Everything you do is a reflection of yourself".

If you were to become a blacksmith, understand what does it mean to strike the hammer, hundreds of thousands of times. Pay attention.

There is always doubt in depth.

MAGNUM OPUS.

I have never written a beautiful program, or made a beautiful backpack. My scrambled eggs are really bad, and my welds are worse than my eggs.

When things are hard, and you are lost, and you only see darkness and doubt, remember that its OK, take your time, and be kind to yourself, pray the Ho'oponopono prayer:

I am sorry

Please forgive me

I forgive you

I thank you

I love you

When doing anything, including understanding yourself, this is the right way. I only know how to teach about computers, but everything is the same in its core. Be curious, kind and patient.

Without further ado, I Welcome you to the Cyberspace.

Never found what I was looking for

Now I found it, but it's lost-- Blind Guardian, Valhalla

Electricity

Electricity is the flow of charged particles.

Charge can be positive or negative.

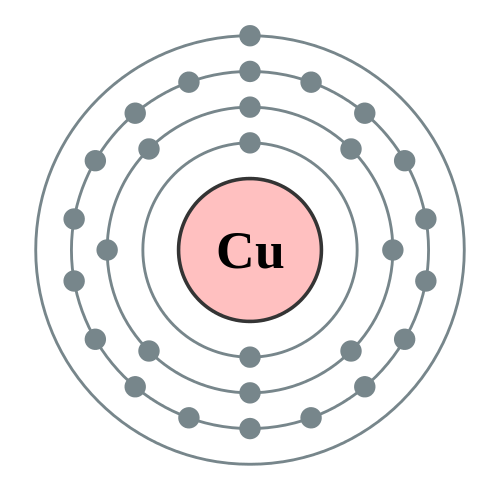

Electrons are one of the 17 fundamental particles of the universe, and for us, the carbon life forms, is possibly the most important one. It is what defines the chemistry that we experience, the materials we build and the way our bodies interact with the world around us. Electrons have negative charge.

Up quarks have 2/3 charge and Down quarks have -1/3 charge.

Protons are made from 2 Up quarks and 1 Down quark, they have positive charge (2/3 + 2/3 - 1/3 = 1). You can see protons are not fundamental, as they are made from quarks, as opposed to electrons which are primitive (as far as we know, not long ago we thought protons are primitive as well).

There are also anti-electrons called positrons, same as electron but with opposite charge, and anti up anti down quarks and so on, they are also fundamental, they are what we call antimatter, we dont have much of it around us in the universe, as it explodes when it interacts with with our matter.

This might sound like nonsense, Up and Down quarks, anti electrons, 17 particles, 1/137 and so on, but, things are the way they are. Absurd. As Terry Pratchet says, living on a disk world on top of 4 elephants, dancing on top of a giant turtle that is swimming through space, is probably less bizzare than quantum mechanics and the standard model of theoretical physics.

Electric current is the flow of electric charge per second, 1 Amp (Ampere) means that 1 Coulomb of charge passess through the point of measure per second.

1 electron has very tiny charge, exactly 0.0000000000000000016 Coulombs, So if you measure 1 Amp in an electric circuit, it means there bazillion electrons passing through. For reference, your laptop's processors runs on about 100 milli amps, or 0.1 amp. Playing music your iPhone about 300000000000000000 electrons cycle through the circle per second.

Some materials make it easy for charge to flow, for example copper or iron, those are called conductors, some make it very hard and resist it, like air or glass, they are insulators, and the most interesting materials are those that can be both a conductor or insulator depending on conditions, we call them semi-conductors. The best ones are those where the condition to make them insulator or conductor is electric charge! So we can have loops where the output of the semi-conductor through complicated structures and paths can feed back into itself and either turn it on or off.

You know how gravity creates more gravity? As in the more mass you have the more gravity, which creates stronger gravitational field, which pulls more mass, which creates stronger gravitational field... and so on. Gravity is unstable. Electricity is not like that, it wants to stop, all it tries to do is to balance itself out. Get to the lowest energy, peace and quiet.

It will always find a way to balance out, sometimes it will surprise you in the paths it finds, it will go back on your wires, or leak or jump, so you have to think carefully, or it will trick you.

We will discuss electricity again in the book, but I suggest you watch Veritasium and styropyro's youtube videos on the subject.

Our computers run on electricity, and all of them use moving electrons. We have discovered how to make reliable semiconductors from Sillicon and Boron/Gallium/Indium, that we can control with electricity. This technology has unlocked the computer revolution.

I learned very early the difference between knowing the name of something and knowing something.

-- Richard Feynman

Gates and Latches

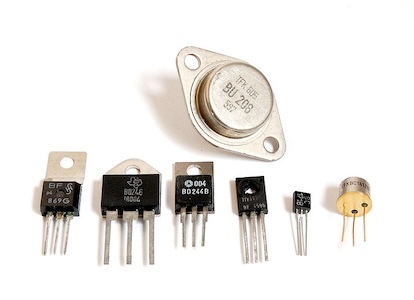

From semiconducting material we have built the Transistor, which is the building block of modern electronics. An electrically controlled switch. It is one of the greatest inventions of mankind, right there with language, and the neural network model of the brain, fire and sliced bread.

It has 3 legs, their names are somewhat weird: Collector, Base, Emitter, but don't worry about the names, the point is, when we apply current through the base (the middle leg), electricity can flow from the collector to the emitter. It is a switch that we can control with electricity.

We can make transistors that are just 10 nanometers in size and connect billions of them into circuits that we use to compute or store information. There is research in Berkeley that actually created a working 1 nanometer transistor, the Oxygen atom is "about" 0.14 nanometers (the quotes around about are due to the absurdity of quantum mechanics, and the experimental fact that atoms do not actually have "size").

A very useful circuit with switches is the NAND gate:

S1 and S2 are switches that we control with our input X and Y, R is a resistor, and we are interested in the output at point Q.

You can see that when both S1 and S2 are open, meaning X and Y are 0, then on Q we have 1, when you close S1 or S2, again + is not connected to ground, so at Q we have 1, but when we connect both S1 and S2 then there is a path from + to - and we have no voltage at Q, so it reads 0.

Where 1 means that current goes through and 0 means it doesn't.

We can put this statement in a table:

| X | Y | Q = NAND(X,Y) |

|---|---|---------------|

| 0 | 0 | 1 |

| 0 | 1 | 1 |

| 1 | 0 | 1 |

| 1 | 1 | 0 |

This table is called "truth table". so Q is NAND(X,Y). NAND means NOT AND, in contrast with the AND truth table, where we get 1 only if both inputs are 1, :

| X | Y | Q = AND(X,Y) |

|---|---|--------------|

| 0 | 0 | 0 |

| 0 | 1 | 0 |

| 1 | 0 | 0 |

| 1 | 1 | 1 |

This is the OR table, where the output is 1 when either of the inputs is 1:

| X | Y | Q = OR(X,Y) |

|---|---|-------------|

| 0 | 0 | 0 |

| 0 | 1 | 1 |

| 1 | 0 | 1 |

| 1 | 1 | 1 |

This is the NOR table, where the output is 1 only when both inputs are 0:

| X | Y | Q = NOR(X,Y) |

|---|---|--------------|

| 0 | 0 | 1 |

| 0 | 1 | 0 |

| 1 | 0 | 0 |

| 1 | 1 | 0 |

XOR means eXclusive OR, and the output is 1 when the inputs are different:

| X | Y | Q = XOR(X,Y) |

|---|---|--------------|

| 0 | 0 | 0 |

| 0 | 1 | 1 |

| 1 | 0 | 1 |

| 1 | 1 | 0 |

We can construct all the other truth tables by various combinations of NAND gates, for example

AND(X,Y) = NAND(NAND(X,Y),NAND(X,Y))

or we can write it as

AND(X,Y) = NAND(A,A) where A is NAND(X,Y)

Lets test this, just think it through.

| X | Y | Q | Q = NAND(NAND(X,Y),NAND(X,Y)) |

|---|---|---|----------------------------------------|

| 0 | 0 | 0 | A = NAND(0,0) is 1, NAND(A=1,A=1) is 0 |

| 0 | 1 | 0 | A = NAND(0,1) is 1, NAND(A=1,A=1) is 0 |

| 1 | 0 | 0 | A = NAND(1,0) is 1, NAND(A=1,A=1) is 0 |

| 1 | 1 | 1 | A = NAND(0,0) is 0, NAND(A=0,A=0) is 1 |

So you can see we made AND truth table by using NAND.

Those gates are the very core of our digital computers. Note, you dont need electricity to create gates, there are there are gates that appear naturally from the laws of physics, People make gates from falling dominos, of from dripping water.

You can get more information from wikipedia or various pages on the internet, if you search for NAND gates. You can of course make a NAND gate with Redstone in Minecraft, and thats how people build digital computers within Minecraft.

- https://en.wikipedia.org/wiki/Transistor

- https://en.wikipedia.org/wiki/NAND_gate

- https://en.wikipedia.org/wiki/NAND_logic

- https://minecraft.fandom.com/wiki/Redstone_circuits/Logic

- https://www.gsnetwork.com/nand-gate/

Now we get into the real meaty part, actually storing 1 bit of information in a circuit!

This circuit is called an SR Latch, for Set-Reset Latch.

The big round things in the middle are NAND gates, Q is the output and Q the inverted output (when Q is 1, Q is 0), we wont care for it, but its in the diagram for completeness. The bar on top of the letter means 'inverted'.

S is again, the inverse of S, and R is the inverse of R.

This feedback loop, where BQ feeds into A and AQ feeds into B creates a circuit that can remember.

(showing the NAND truth table again so we can reference it)

| X | Y | Q = NAND(X,Y) |

|---|---|---------------|

| 0 | 0 | 1 |

| 0 | 1 | 1 |

| 1 | 0 | 1 |

| 1 | 1 | 0 |

The SR Latch has 4 possible configurations, called Set Condition, Reset Condition, Hold Condition and Invalid Condition.

The Set Condition forces the latch to remember 1, Reset forces it to remember 0, and Hold makes it output whatever the previous value was.

Set Condition (S = 0, R = 1)

Gate A:

- AX = S = 0

- AY = Q (from Gate B)

- Since AX = 0, the NAND gate outputs Q = 1 regardless of AY

- AQ (Q) = 1

Gate B:

- BY = R = 1

- BX = Q = 1 (from Gate A)

- NAND(1,1) = 0

- BQ (Q) = 0

OUTPUT: Q = 1 (latch is set)

Reset Condition (S = 1, R = 0)

Gate B:

- BY = R = 0

- BX = Q (from Gate A)

- Since BY = 0, the NAND gate outputs Q = 1 regardless of BX

- BQ (Q) = 1

Gate A:

- AX = S = 1

- AY = Q = 1 (from Gate B)

- NAND(1,1) = 0

- AQ (Q) = 0

OUTPUT: Q = 0 (latch is reset)

Hold Condition (S = 1, R = 1)

Assuming previous state Q = 1, Q = 0:

- Gate A: AX = S = 1, AY = Q = 0

- Since AY = 0, the NAND gate outputs Q = 1

- AQ (Q) = 1

- Gate B: BX = Q = 1, BY = R = 1

- NAND(1,1) = 0

- BQ (Q) = 0

- OUTPUT: Q = 1 (latch holds previous state)

Alternatively, if previous state Q = 0, Q = 1:

- Gate A: AX = S = 1, AY = Q = 1

- NAND(1,1) = 0

- AQ (Q) = 0

- Gate B: BX = Q = 0, BY = R = 1

- Since BX = 0, the NAND gate outputs Q = 1

- BQ (Q) = 1

- OUTPUT: Q = 0 (latch holds previous state)

Invalid Condition (S = 0, R = 0)

This forces both Q and Q to be 1, which is invalid, as Q has to be the inverse of Q.

In the Hold Condition the outputs of the gates depend on their own previous outputs, creating a stable loop.

The latch remembers! The bit is stored in the infinite loop.

The SR latch is extremely fundamental building block for memory, it shows how we can store a bit of information indefinitely as long as there is power.

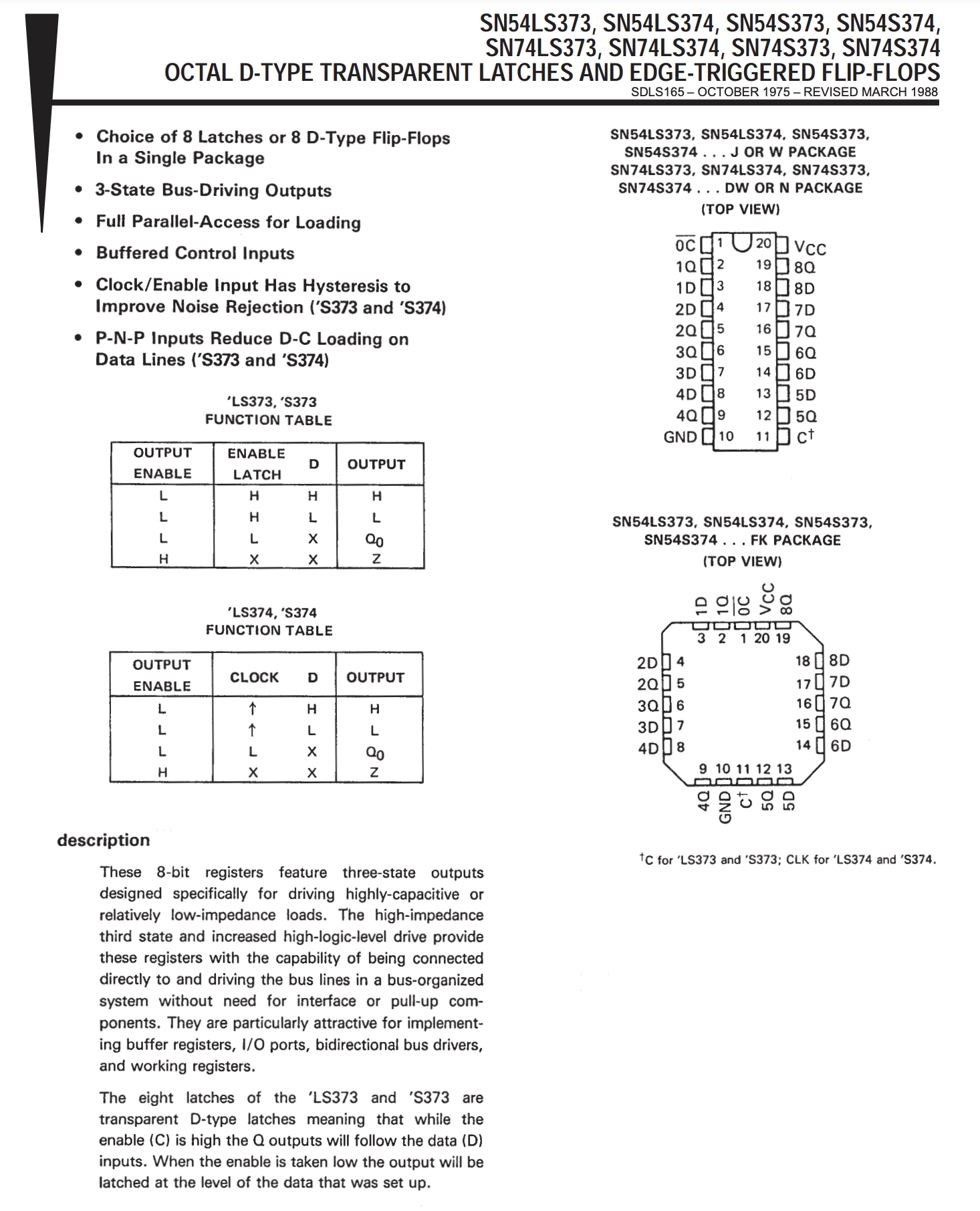

Another fundamental building block is the Data Flip-Flop (D Flip-Flop) circuit, which reads the Data at a clock pulse and remember is. They allow for creation of registers, counters, shift registers and memory elements.

They are more complicated, but basically it allows you to remember the Data value (0 or 1), when the Clock signal is rising. It is called an edge triggered D flip flop. But you can notice the 'latches' inside, those infinite feedback loops are what makes the circuit remember.

I won't go into more detail, but this is by no means an introduction to electronics, nor gates, nor latches, as a lot more goes into it, in practical and theoretical aspects, but it is enough for you to ask questions and have some sort of a mental model about what a 'bit' means in the computer.

If you want to investigate the subject further I suggest:

- Practical Electronics for Inventors

- But How Do It Know? The Basic Principles of Computers for Everyone

- Art of electronics

- The Elements of Computing Systems: Building a Modern Computer from First Principles

- Ben Eater

- ElectroBOOM

- I made a Minecraft in Minecraft with redstone

- Flip Flop

- SR Latch

Who looks outside, dreams; who looks inside, awakes.

-- Carl Jung

Memory

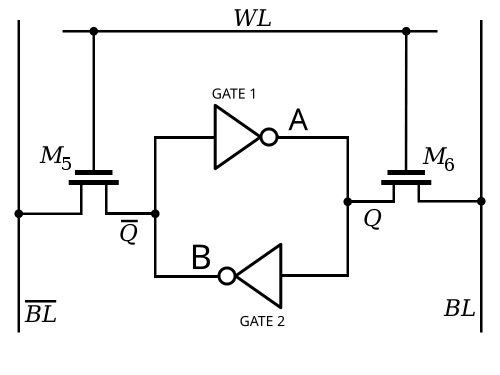

Now you know how to store 1 bit with a latching circuit, there is another configuration using 6 transistors to form the infinite loop, called "6T SRAM cell", that makes it easier to build a huge array of cells and allows us to access the data.

This is how a cell looks:

The picture looks complicated, but the idea is the same as the Flip Flop and SR Latch loops. The circuit guarantees that as long as there is power, it will remember.

In order to read the picture I will have to explain a bit more about the transistors. There are many kinds of transistors, but their purpose is the same, to be an electrically controlled switch. The way they work is by opening or closing a channel in which electrons can flow.

The ones we were discussing previously are usually NPN transistors, but for memory we use MOSFET transistors, which are Metal Oxide Semiconductor FET (Field Effect Transistor). Anyway, the names are not important, the idea is important.

There are two kinds of MOSFETs, NMOS and PMOS, both have 3 legs, but they have different names than the NPN transistors. The MOS legs (I am not even sure if we should call them legs, since we make them so tiny that they are few atoms in size) - I can't overstate the amount of progress we have had in this area, and I am actually afraid that we will forget how to make them. Anyway, the PMOS and NMOS's legs are called Gate, Source, Drain.

There are hundreds of videos on youtube that explain how they work, Electro BOOM made a video recently as well, please check it out before you continue, its just 20 minutes or so and its really good.

In the memory cell, M2 and M4 are PMOS, you can see they have a small circle on their gate, and M1 and M3 are NMOS.

PMOS:

- It turns ON when its gate voltage is

LOWERthan its source voltage - It turns OFF when its gate voltage is

HIGHERthan its source voltage

NMOS:

- It turns ON when its gate voltage is

HIGHERthan its source voltage - It turns OFF when its gate voltage is

LOWERthan its source voltage

You see the on M5 and M6 (both of which are NMOS), the Source and Drain actually depend on which side the voltage is, which depends on the value of the inner loop between M1, M2, M3 and M4.

We will zoom in on M3 and M4:

When the input is LOW: The PMOS transistor (M4) turns ON; The NMOS transistor (M3) turns OFF; The output Q is pulled up to VDD (HIGH).

When the input is HIGH: The PMOS transistor (M4) turns OFF; The NMOS transistor (M3) turns ON; The output Q is pulled down to ground (LOW).

This is just a NOT gate, whatever we have as input, the output is the inverse.

So, lets think about our memory cell in a bit more simplified way. It is just a loop of NOT gates.

The symbol for a NOT gate, also called an inverter, is a triangle with a circle.

Now, follow the loop, if Q is HIGH the output from GATE1 is LOW, so Q is LOW, and then the

input to GATE2 is LOW, so its output is HIGH

If Q is LOW the output from GATE1 is HIGH, so Q is HIGH, and then the

input to GATE2 is HIGH, so its output is LOW.

This is the crux of the memory loop, two CMOS inverters in a loop, or two NOT gates in a loop, same thing.

Now lets talk about how are we going to read or write from the inner cell. After all we want to store many many bytes of data, and the cell is only 1 bit, so we have to organize a whole array of cells into a structure that makes it possible to read multiple in the same time.

First lets check the WL (Word Line), you see that when its LOW M5 and M5 are OFF

so nothing happens, we dont touch the inner cell, it is isolated from BL (the

bit lines), and it is storing its value in the infinite loop of the not

gates. Which is quite poetic BTW, infinite denial stores the bit. Whatever the

value was it stays like that, so if Q is 1 Q is 0 and vice versa. As long as

VDD exists this state is mantained.

If we want to read, we must set the Word Line to HIGH, both BL and BL are

'precharged' to HIGH, meaning they are HIGH before the Word Line is HIGH. At the

moment that WL is set to HIGH, depending on the value of the inner cell, one of

the bit lines will be pulled LOW. If Q = HIGH then BL will be HIGH and Q will

be LOW so BL will be LOW. And if Q = LOW, BL will be LOW, and Q is HIGH

which pulls BL HIGH. A special circuit called sense amplifier can detect this

effect.

I wont get into detail why precharging is needed, as it is beyond the scope of the book, but I encourage you to investigate it.

Writing is very similar to reading, but instead of sensing the change in BL and

BL, they are set to the value we want, so to write 1 we set BL to HIGH and

BL to LOW, to set 0 we set BL to LOW and BL to HIGH, and once WL is HIGH

the bit is stored in the inner cell.

Don't panic if you don't get all this LOW and HIGH business. Draw the

circuit on paper and follow it with a pen, or even better, just take a pen and

write on this book. Follow the lines, imagine water flowing through and think

about the transistors as valves that turn it on or off.

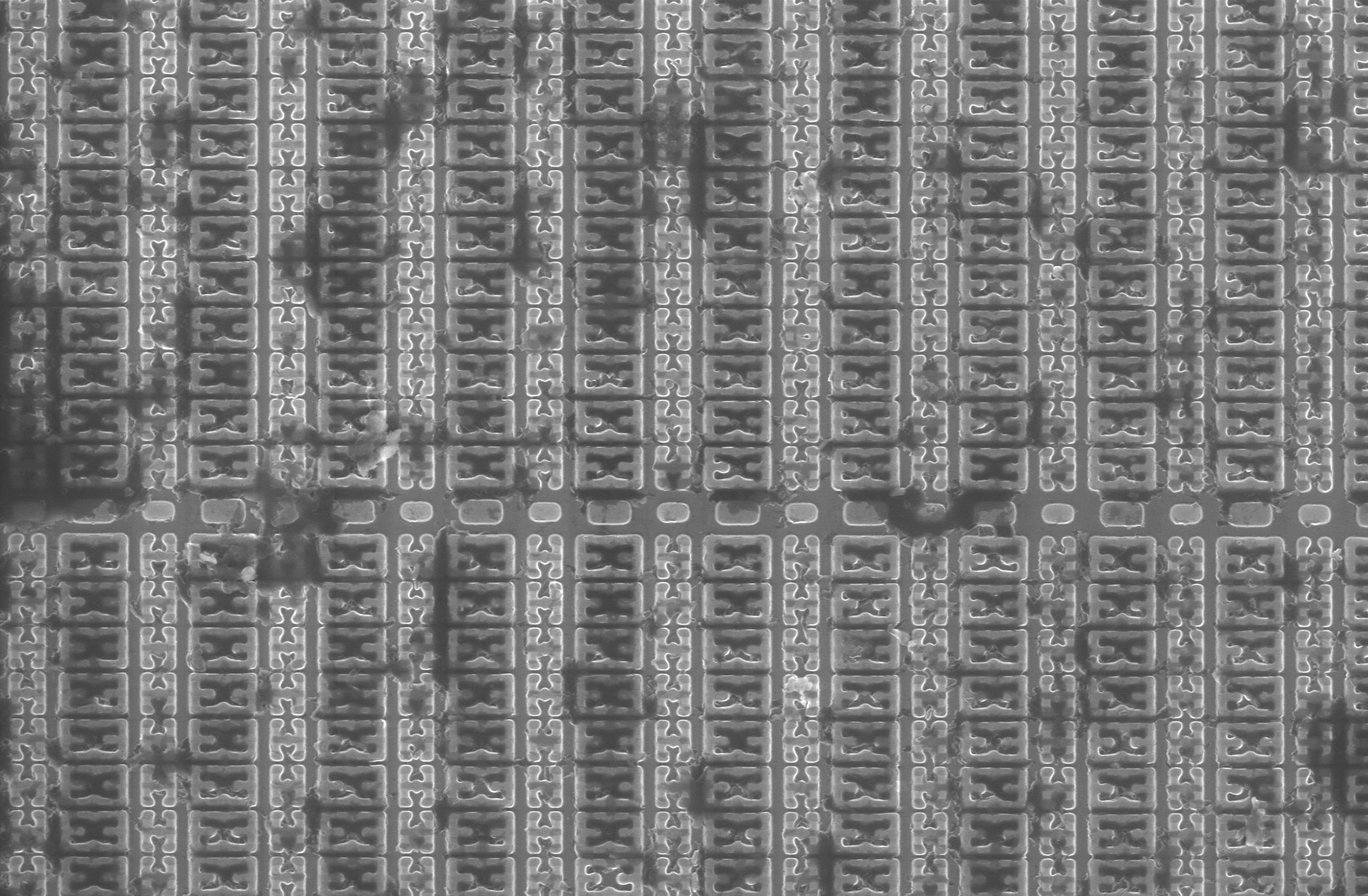

This is how an organization of cells looks like in the real world:

Or as a diagram:

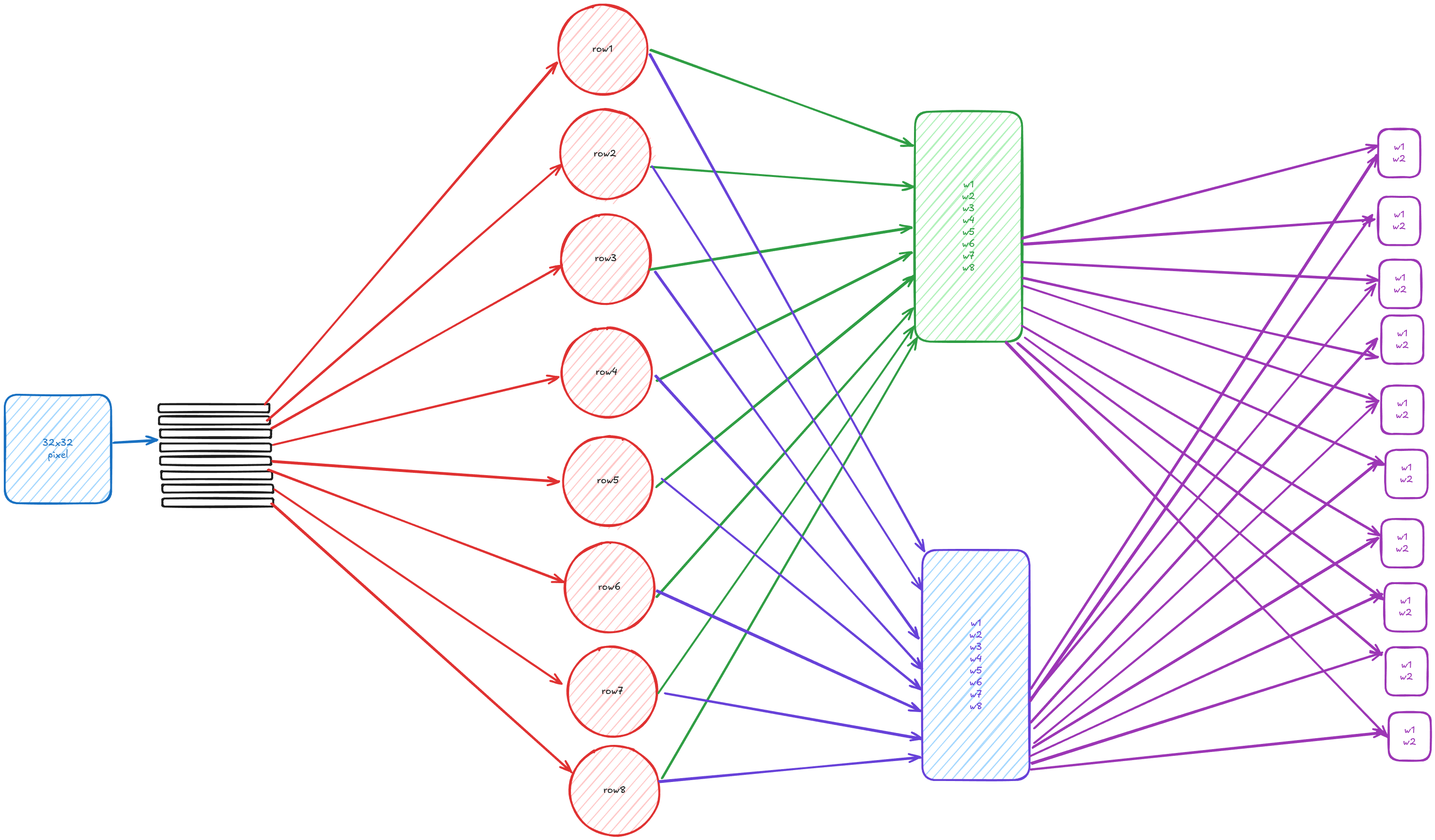

We make a grid of cells, there is a Row Decoder and a Column Decoder and Sense

Amplifiers. The row decoder controls the Word Line, and the column decoder the

bit lines. Only one word line can be HIGH at a time, while multiple bit lines

can be active from the column decoder, and by active I mean it connects them to

the sense amplifier or the write drivers (circuits that force the state on BL

andBL).

On our diagram we have 8 x 8 cells, so in total we have 64 bits of memory,

Imagine we want to write the value 0 at the purple inner cell, it is at location

ROW: 3, COL: 4, we want the row decoder to disable all other Word Lines besides

the one at row 3, and we want the column decoder to enable the write driver at

column 4, and set the BL to LOW, and BL to HIGH on this column. Now if you

follow the lines you see that since no other word line is enabled, only our

purple cell will get set to 0.

We actually want to give the number 3 to the row decoder, which is 0011, and

the number 4 to the column decoder, which is 0100, and they should enable the

right lines. So there are 8 cables going into the memory if we set them to

LOW LOW HIGH HIGH LOW HIGH LOW LOW, or 0011 0100, then from

the output of the memory we will read the value of the purple cell. This is what

a memory address is. It is literally its row and column position. In our case

the decimal number of 00110100 is 52, so our bit is at address 52.

This kind of memory is called RAM, or Random Access Memory, because you are allowed to read and write to any address. It is also called volatile memory, because once the power goes down, the data disappears.

There are many kinds of RAM, the one we discussed is SRAM, or Static RAM, because as long as there is power data is stable, there is also DRAM which has to be refreshed every few milliseconds to keep the data.

You can see in our example that when we enable the word line we can actually

write or read all the value of the row, thats why the word line is called a

word line, a word is the natural unit of data that the processor can work

with. In different systems they have different values, in the past we had

systems with 8, 12, 16, 18, 21 .. bit words, now almost everything 32 bit or 64

bits. That is why in C the size of int is defined in the standard as minimum 2

bytes and maximum 4 bytes.

There are much more complicated organizations, but that is beyond our scope, if you are interested search for DRAM, NAND flash memory, FRAM.

But the real question is, why would we want to address individual bytes or bits? Do programs need addressable memory? After all most of the things we do are sequences, for example this text, is read and written as a sequence of characters. The laws of physics are updated sequentially, in a smooth continous flow of communication through bosons, nothing is abrupt, so why would want to randomly access the purple bit 53 for example?

Lets look at this program:

That which is in locomotion must arrive at the half-way stage

before it arrives at the goal.

-- Aristotle, Physics VI:9

Lets say we want to travel a distance of 2 meters, before we get there we surely must travel 1 meter, and before we get there we must travel half a meter, .. and so on.. before we travel 0.0001 meters we must travel 0.00005 meters..

And so, when we evaluate the program in our head, it seems like nothing should move, because it will infinitely get the half of the half of the half of the half of the half of the half of the half of the half of the half of the half of the half of the half of the half of the half of the half of the half of the half of the half of the half of the half of the half of the half of the half of the half of the half of the half of the half of the half of the half of the half of the half of the half of the half of the half of the half of the half of the half of the half of the half of the half...

Now imagine we want to follow 10 people, and we have to remember each person's half, so that we can compute its half, we must "look up" the previous value. How do you imagine keeping track of all the halves when people complete them at different time?

What about this program:

copy this sentence below

Amazingly the program writes more of itself:

copy this sentence below

copy this sentence below

copy this sentence below

copy this sentence below

copy this sentence below

copy this sentence below

In order to do that its evaluator must know where it ends, and where is 'below'.

copy this sencence below, then delete the sentence above

after few interations we get:

........................................................

........................................................

........................................................

........................................................

........................................................

copy this sencence below, then delete the sentence above

Look again at this program:

I am what I was plus what I was before I was.

Before I began, I was nothing.

When I began, I was one.

When we executed the values "slide" through memory,

0 | 0: Before I began I was nothing

1 | 1: When I began I was one

2 | 1 = 1 + 0 I am what I was plus what I was before I was.

3 | 2 = 1 + 1 I am what I was plus what I was before I was.

4 | 3 = 2 + 1 I am what I was plus what I was before I was.

5 | 5 = 3 + 2 I am what I was plus what I was before I was.

6 | 8 = 5 + 3 I am what I was plus what I was before I was.

7 | 13 = 8 + 5 ...

8 | 21 = 13 + 8 ...

... | ...

50 | 12586269025 = 4807526976 + 7778742049

... | ...

250 | 7896325826131730509282738943634332893686268675876375 = ...

... | ...

You see "before I was" is just CURRENT ADDRESS - 2, but this could be at address 1024, then when you say again "before I was" it is at address 1032, so the "before I was" moves as the program is evaluated.

You see how natural it is to be able to refer to the information's location, for example knowing where is 'below' or 'above', or knowing where you stored the half of the half, so that you can take its half.

There is subtle difference between infinite half of the half (1) for 10 people and I am what I was plus what I was before I was (2).

-

Feels more like a filing cabinet, where you just need to find the value of the previous half, and then replace it with the new value. Updates are abrupt, first person 7 passes their half, then person 3, then person 8.

-

Feels more like a river carrying data with it. Things only communicate/interact with their surroundings. One thing leads to the next and so on. Maybe a better example is lyrics of a song, for me it is really hard to sing a song from the middle, but have no issue to sing it from start to finish otherwise.

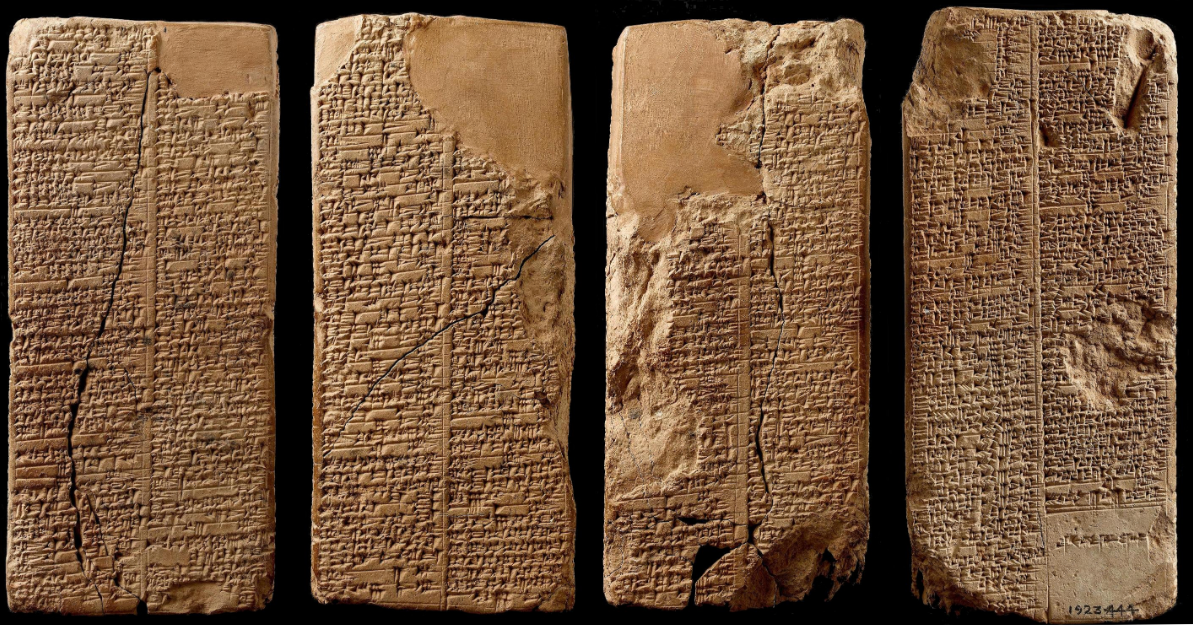

I don't know why, but we seem to think with addressable memory, It is much easier to express our complex ideas by storing information in places and be able to look it up and change it. Since Gilgamesh and Enkidu of Uruk, and possibly even before that, 4000 years ago, we know that the people of Sumer were making lists, storing and indexing information.

This is the list of kings:

In Ur, Mesannepada became king; he ruled for 80 years. Meskiagnun, the son of Mesannepada, became king; he ruled for 36 years. Elulu ruled for 25 years. Balulu ruled for 36 years. 4 kings; they ruled for 171 years. Then Ur was defeated and the kingship was taken to Awan...

Even today in the modern office you will see everything is indexed in file cabinets and folders with labels, our TV channels, our houses, our book pages are numbered and addressable, books even have inverted indexes of which information is on which page, which company is at which address, etc. The principle is the same as the sumerian king list, which year did which king rule, which king ruled how many years.

When you think of ways how to track the 10 people's halves, you intiuitively imagine all kinds of devices, like boxes, or pages, or you can just "remember them", but think for a second, what does "remembering them" mean, it means when runner number 1 gets to their half you have to conjure the previous half divide it by 2 and then remember the new value. If you build a system with pages, e.g. runner 1 is on page 1, runner 2 on page 2, etc, and runner 1 reaches the half, you just open to page 1, read the current value, halve it, and write the new value.

Again, we "think" with addressable memory. Today, programming languages that allow direct memory manipulation, and the ability to label memory, are vastly more popular than the ones that don't, that of course does not make them better or worse, just different.

There are stack computers for example, that do not have a concept of an address, and are just as powerful. Or neural network computers, where the program and its memory is in the interaction strengths between the neurons. In biological or chemical computers it seems the information is stored and retrieved in potential energy and the emergent structures because of it. There are also graph computers, quantum computers, and so on.

But for us, human beings, it seems it is easiest to express ourselves by mutating (changing) memory.

OK, now things are going to get crazy, I will show you how powerful addressable memory is, and how we can build very simple universal computers with it.

Just with addressable memory, subtract and if we can build universal

computer. Our computer will be able to do only 1 thing, given 3 numbers, A,B,C

it will subtract the value at location B - value at location A, store the result

back in location B, and if the result is less or equalt zero, move to location

C, if not continue to execute the next location.

This language is called SUBLEQ (SUBtract and branch if Less than EQal to zero) is possibly the simplest one instruction language.

This is a pseudocode of what it does:

PC = 0

forever:

a = memory[PC]

b = memory[PC + 1]

c = memory[PC + 2]

memory[b] = memory[b] - memory[a]

if memory[b] <= 0:

PC = c

else:

PC += 1

PC means Program Counter, it is just a bit of memory where track where exactly

are we in the program and what instruction we should execute, like your finger

keeping the book open when you want to remember which page you are

at. memory[a] means the stored value at address a, which itself mean

particular row and column in the grid of CMOS circuits, or if the memory was a

book, and our values were whole pages, a will be the page number. If the

memory was a street with houses, then a will be the street number, and inside

the house at a will be the value at this address.

Examine the following program: 7 6 9 8 8 0 3 1 0 8 8 9, looks a bit scary, but

let me rewrite it in a grid, on each call you see the value and its address.

| 70 | 61 | 92 |

| 83 | 84 | 05 |

| 36 | 17 | 08 |

| 89 | 810 | 911 |

When the processor starts, it will load the first instruction and start executing:

Breakdown of the execution:

0: subleq 7, 6, 9

a = memory[0], which is 7

b = memory[1], which is 6

c = memory[2], which is 9

memory[b] = memory[b] - memory[a]

if memory[b] <= 0:

PC = c

else

PC += 1

in our case, on location 6 we have 3, and on 7 we have 1

so we will store 2 (the result of 3 - 1) at location 6

and since it is greather than 0, we will continue to the

next instruction.

3: subleq 8,8,0

a = memory[2], which is 8

b = memory[3], which is 8

c = memory[4], which is 0

memory[b] = memory[b] - memory[a]

if memory[b] <= 0:

PC = c

else

PC += 1

you will notice, that in locaiton 8 we have: 0

so 0 - 0 is 0, so we will jump to the 3rd parameter

of the instruciton, which is 0

9:

subleq 8, 8, 9

a = memory[9], which is 8

b = memory[10], which is 8

c = memory[11], which is 9

memory[b] = memory[b] - memory[a]

if memory[b] <= 0:

PC = c

else

PC += 1

and.. surprise, we are at location 9

so it will execute this instruction forever

It is a simple counter that counts from 3 to 0.

What it can do is only limited by our ability to program it. If we make it big enough, it can simmulate the weather on our planet, or, some people say, the universe. It is, what we call now, an universal computer.

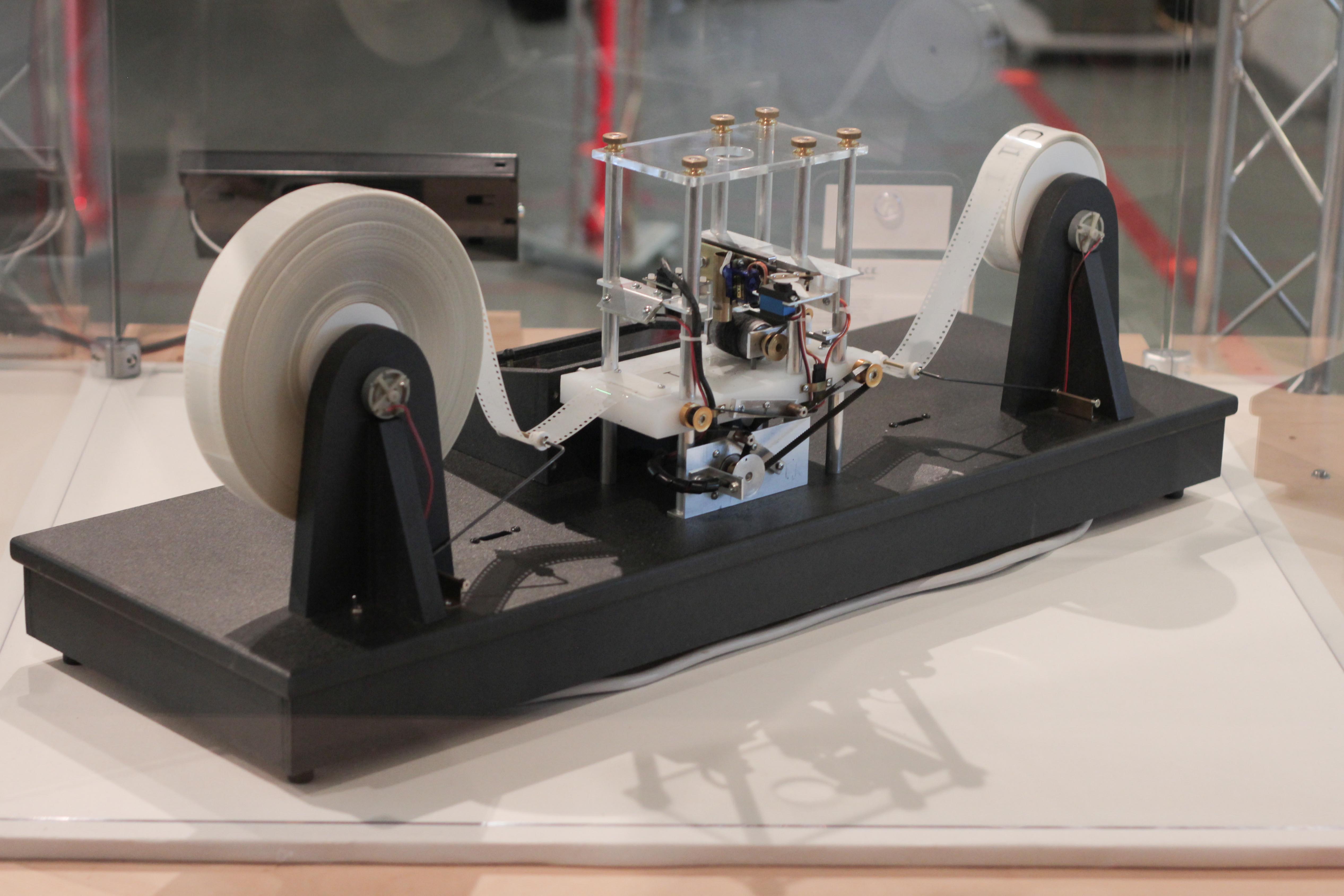

Alan Turing, in 1930s found the universal computing machine, now we call it a Turing Machine.

...an unlimited memory capacity obtained in the form of an infinite tape marked out into squares, on each of which a symbol could be printed. At any moment there is one symbol in the machine; it is called the scanned symbol. The machine can alter the scanned symbol, and its behavior is in part determined by that symbol, but the symbols on the tape elsewhere do not affect the behavior of the machine. However, the tape can be moved back and forth through the machine, this being one of the elementary operations of the machine. Any symbol on the tape may therefore eventually have an innings. -- Alan Turing 1948

What Turing has found is that any machine that has memory and can make choices based on said memory can compute any computable sequence. You see, being able to replace the whole memory at once, or being able to read individual bytes or bits of information is not important for the theoretical machine. Anything that can simulate the universal Turing machine can compute anything computable; we call this property Turing-completeness. The term "memory" is used a bit losely here, memory can be obfscure, like the memory of neural networks is not obvious to us, but there is still memory there.

We design our computers so that we can program them, and that means to be able to express our ideas in their language. Even this primitive SUBLEQ language is much easier for us to program than the simplest chemical computer. Again, possibly due to the way we use our memory, somehow our memory can recall information on demand, when you think of an apple, an apple will appear in your imagination. The same program can be written in infinitely many ways, in different languages, or for different computation machines, even though it might do the same thing, so we have to pick the one that works for us.

You saw how the grid of RAM cells looks, it is instant to access specific bytes form it, we just have to toggle a switch and with almost the speed of light we get the data. So it is not only natural to us, but also extremely practical to use addressing for our programs.

Alonzo Church, a titan, who at the same time as Turing, discovered another universal computer. Both of them made their machines, and even though they look nothing alike, each can simmulate the other. Church discovered that everything that can be computed can be expressed as transformation of symbols. I won't go into detail, just enough to leave you confused. It does not use memory in the same way; its memory is stored in recursion, and its choices are stored in selection.

Computation is far more general than the machines we built, don't be confused by the bits and bytes, ones and zeroes. Everything is the same, but, you must be able to talk to the machine, to make your program do what you want, so you must understand the machine in order to think like it and find a way to communicate with it.

Humans have 'theory of mind', I can pretend that I am you, and think what you would do, how would you feel, why are you doing the thing that you are doing. Proven by the famous 'Sally-Anne test": Sally puts her marble in the red box and goes outside. While she’s gone, Anne moves the marble to the blue box. When Sally comes back where would she look for the marble first? You could think what she would do, she of course might surprise you, and not look for the marble at all, and if she doesn't you could think of reasons why, maybe she hid because she hates it and never wants to see it again. This is theory of mind, you being to able to think what another human would do and why would they do it. Theory of Mind is in the fabric of our ability to communicate, interact and build complex societies. That is why human language is so different than machine language. Language for humans is not only communication mechanism, each symbol produced, modifies the writer themself, as well as the reader. What does that mean for a writer who writes for themselves? Human language is ever changing. Its purpose is to express subjective experience, emotion, intention, it has nuance and metaphor, and its meaning emerges from interpretation and introspection. It is ambigous and contextual by nature, one symbol can mean nothing and everything.

Programming language is very different, it is determinism, int a = 1 + 1, it

is completely unambigous, strict, it is more of an encoded set of instructions

than what we mean by "language".

Both human and programming languages have structure, grammar and vocabulary, and

this is in fact the formal definition of "a language", but you can see they are

in fact very different in the way the symbols are evaluated, due to the nature

of their evaluator. The purpose of a programming language is for humans to be

able to express their idea to the machine. Any computer can run all programs,

but the program for a chemical computer looks very different than a program for

a digital computer, e.g. the program a = 1 + 1, we could compile that into

instructions for both computers, but it could be that for the chemical computer

this is incredibly difficult task, could take 1 year to execute reliably, but in

the digital computer it takes 1 nanosecond. Our programming languages are bound

by the computer which will execute their program. In the same time programs can

live in some very abstract space, e.g. the expression x = x + 1 can work with

value of x so large that there are not enough electrons in the whole universe

to encode its value. But the language must be practical, it must make it as easy

as possible for the human to write the program, and for the computer to execute

said program.

Most programming languages try to ignore that our computers are what they are, of course, for noble goals: to write complex programs is beyond our abilities. We keep trying to create languages with emergent properties to save us from ourselves. Look at the average programmer and think how would they use it the language, will their program require more maintenence, will there be more bugs, can you replace the programmer easilly, is it productive, is it performant, and so on. Language designer have all kinds of inspirations. Sometimes they forget that the average programmer does not exist. Nothing average exists. If you were to make a chair, the perfect chair for me might be a torture device for you, so the chair designer have to compromise, because they want to sell chairs both to you and me. And we get an average chair, worse for both.

Understand how the digital computer remembers and how it thinks, will help you to have a 'theory of mind' when talking to it. This applies to any system you are interracting with, that is what understanding physics and math gives you, the ability to think like the universe. To ask questions: why is it moving, why is did it stop? When you save a file on your One Drive disk, then the you open the drive on another compuiter, and the file is gone, why is it gone? How could it be that things are the way they are? How do pixels work on your screen, or WiFi, how about the TV's remote control? You see how well you understand Sally, you can understand anything in the same way, if you think like it, examine its parts, and the part's interractions, empathize with it.

Many give up on understanding, some they confuse it with success, their goal is to get a good job, or impress their teacher, parents or peers, or even themselves, others think they are not good enough, others think they have gained mastery, "there is nothing more to understand" they say.

Fools.

To understand one thing means to understand everything. Hundred lifetimes are not enough.

Be careful, as Jung says, There is only one way and that is your way.

There is only one way and that is your way; there is only one salvation and that is your salvation. Why are you looking around for help? Do you believe that help will come from outside? What is to come is created in you and from you. Hence look into yourself. Do not compare, do not measure. No other way is like yours. All other ways deceive and tempt you. You must fulfill the way that is in you.

Oh, that all men and all their ways become strange to you! Thus might you find them again within yourself and recognize their ways. But what weakness! What doubt! What fear! You will not bear going your way. You always want to have at least one foot on paths not your own to avoid the great solitude! So that maternal comfort is always with you! So that someone acknowledges you, recognizes you, bestows trust in you, comforts you, encourages you. So that someone pulls you over onto their path, where you stray from yourself and where it is easier for you to set yourself aside. As if you were not yourself! Who should accomplish your deeds? Who should carry your virtues and your vices? You do not come to an end with your life, and the dead will besiege you terribly to live your unlived life. Everything must be fulfilled. Time is of the essence, so why do you want to pile up the lived and let the unlived rot?

-- Carl Jung, Liber Secundus

I have confused you enough, but will leave you with one more riddle:

I am what I read plus what I write.

Before I began, I read nothing.

When I began, I wrote "I am what I read plus what I write."

This language program, creates itself, defines itself, and its output is itself. How do you think it uses memory?

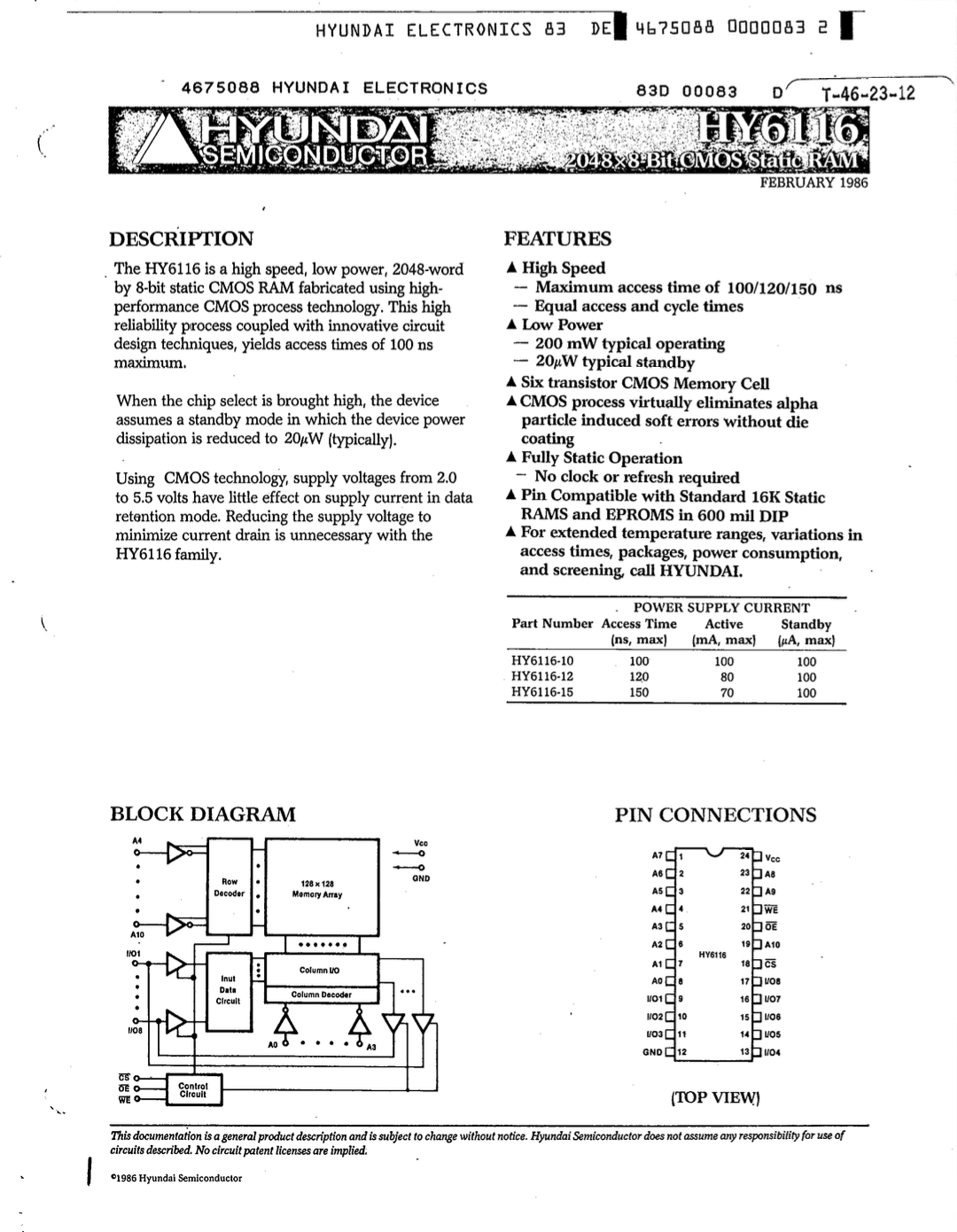

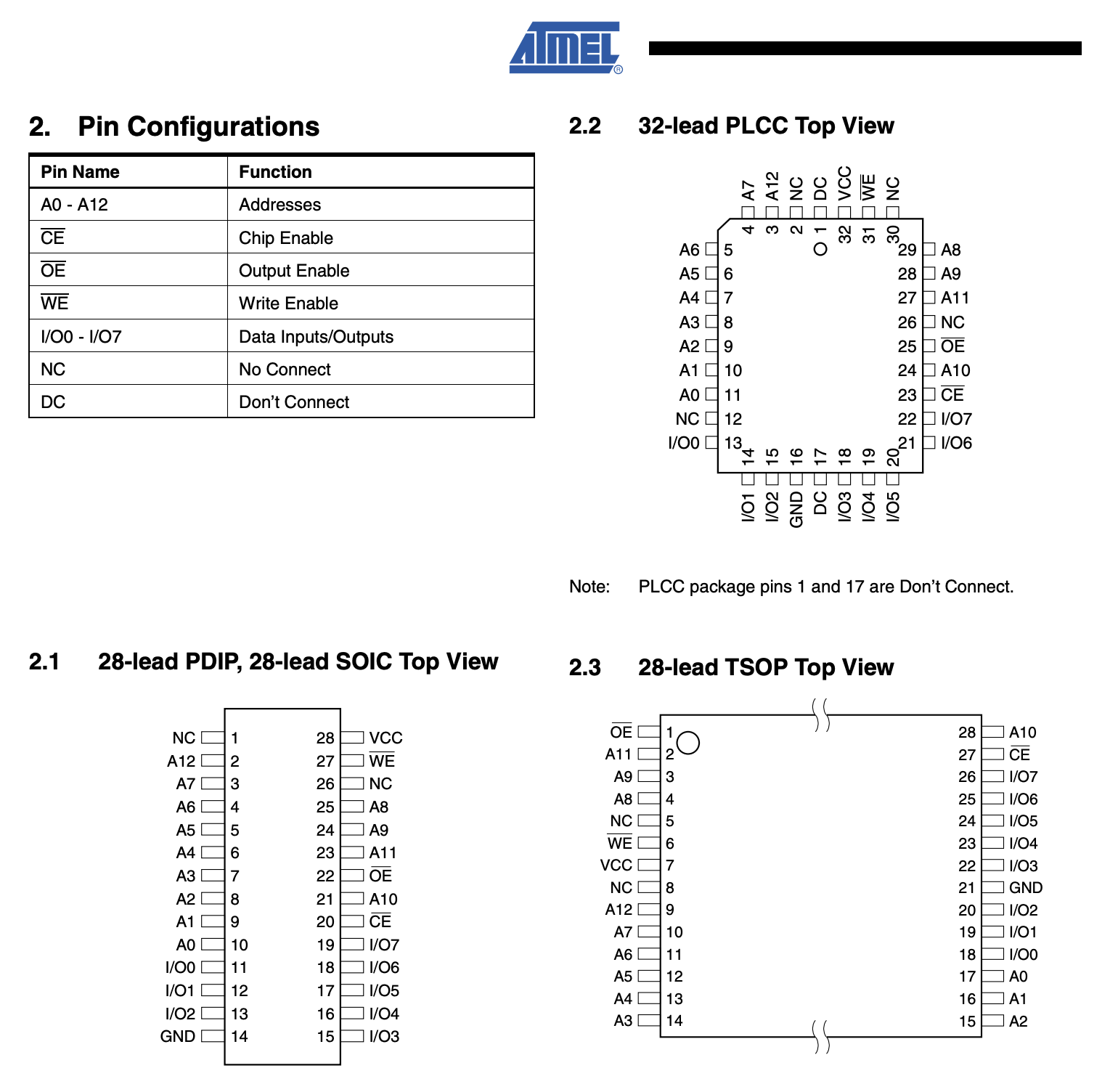

Going back to the wires. Lets have a look of how SRAM actually looks, this is the HY-6116 2048 x 8 bit SRAM chip

This chip is quite old, from 1986, and it has only 2048 bytes of memory, but we will use it for education purposes.

When you buy a chip you get a datasheet where you can see its specifications, and how it works.

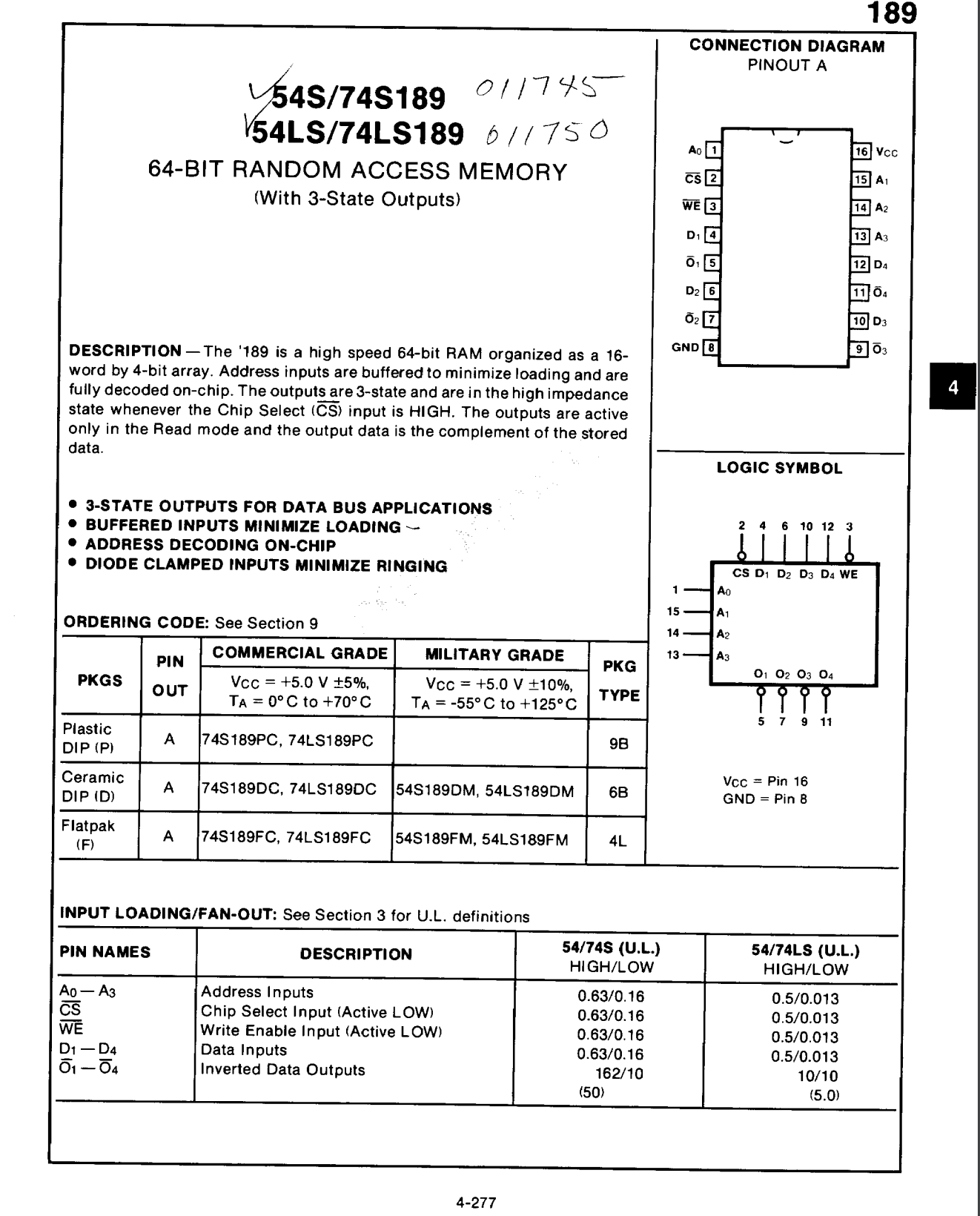

In the first page of the datasheet you can spot some quite familiar words, you can see the row decoder, the column decoder, you can see the grid of 128 x 128 cells. You can see the row decoder has 7 wires, from A4 to A7, so we can represent any number from 0 to 127, but strangely the column decoder takes only 4 wires coming in, A0, A1, A2, A3, so it can represent only 16 columns, from 0 to 15. Which gives us 128 * 16 = 2048 locations, but the grid has 16384 cells. This is because we always read or write one byte at a time, we are not addressing each bit, but each 8 bits.

The 8 IO lines are the input and output for the data. We either read a byte or write a byte using them.

There are few more wires that are important, CS, WE, OE, the bars on top of them mean "active low", so when it is connected to ground it is active, and when it has voltage it is inactive.

- CS: chip select - when enabled the chip is active

- WE: write enable - using the IO lines are we reading or writing, that tells the column decoder if it should enable the sensors or the bit lines to the IO lines

- OE: output enable - for reading, we want to tell the chip WHEN to put the data

on the IO lines, putting the data means setting them

HIGHorLOW, so in order to read, we disable WE, and at the very moment that OE is active, the chip will put the data on the lines. Once OE is inactive the sensors are disconnected from the IO lines.

For our computer we will use a smaller chip, but it has similar pins, and it is way smaller, only 16 bytes, but it will work for us.

One important thing to notice is that the output of this chip is inverted, so if we store 1, in a location, the output will be 0, and if we store 0 the output will be 1, which means we will have to use a NOT gate to invert the outputs to use them properly.

An element which stimulates itself will hold a stimulus indefinitely.

-- John von Neumann

Central Processing Unit: CPU; The Processor.

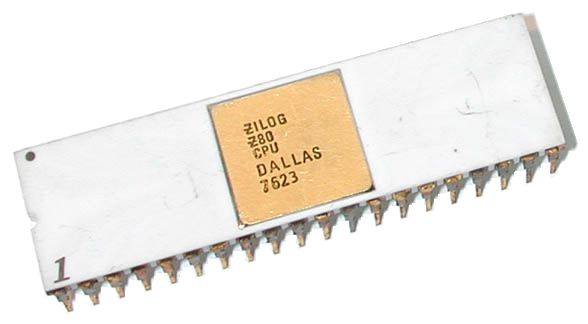

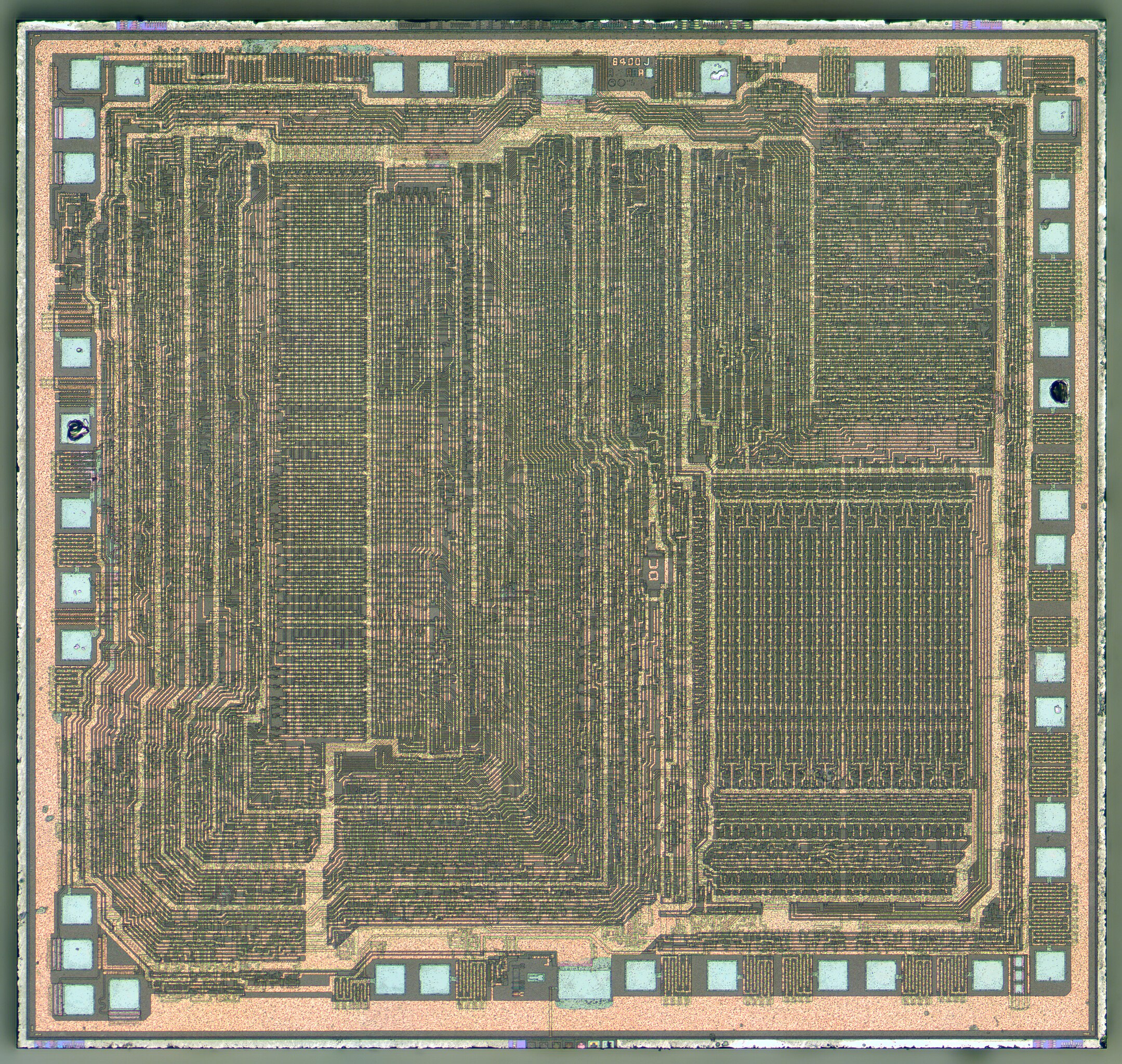

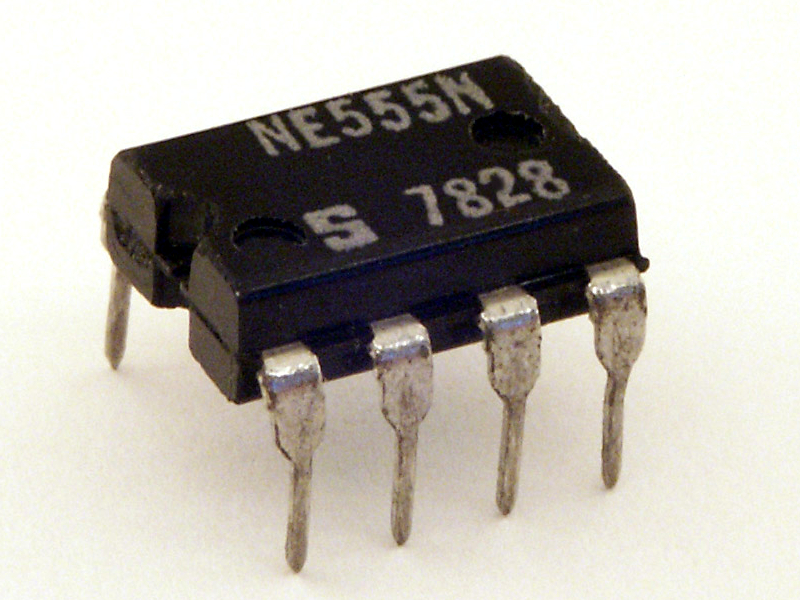

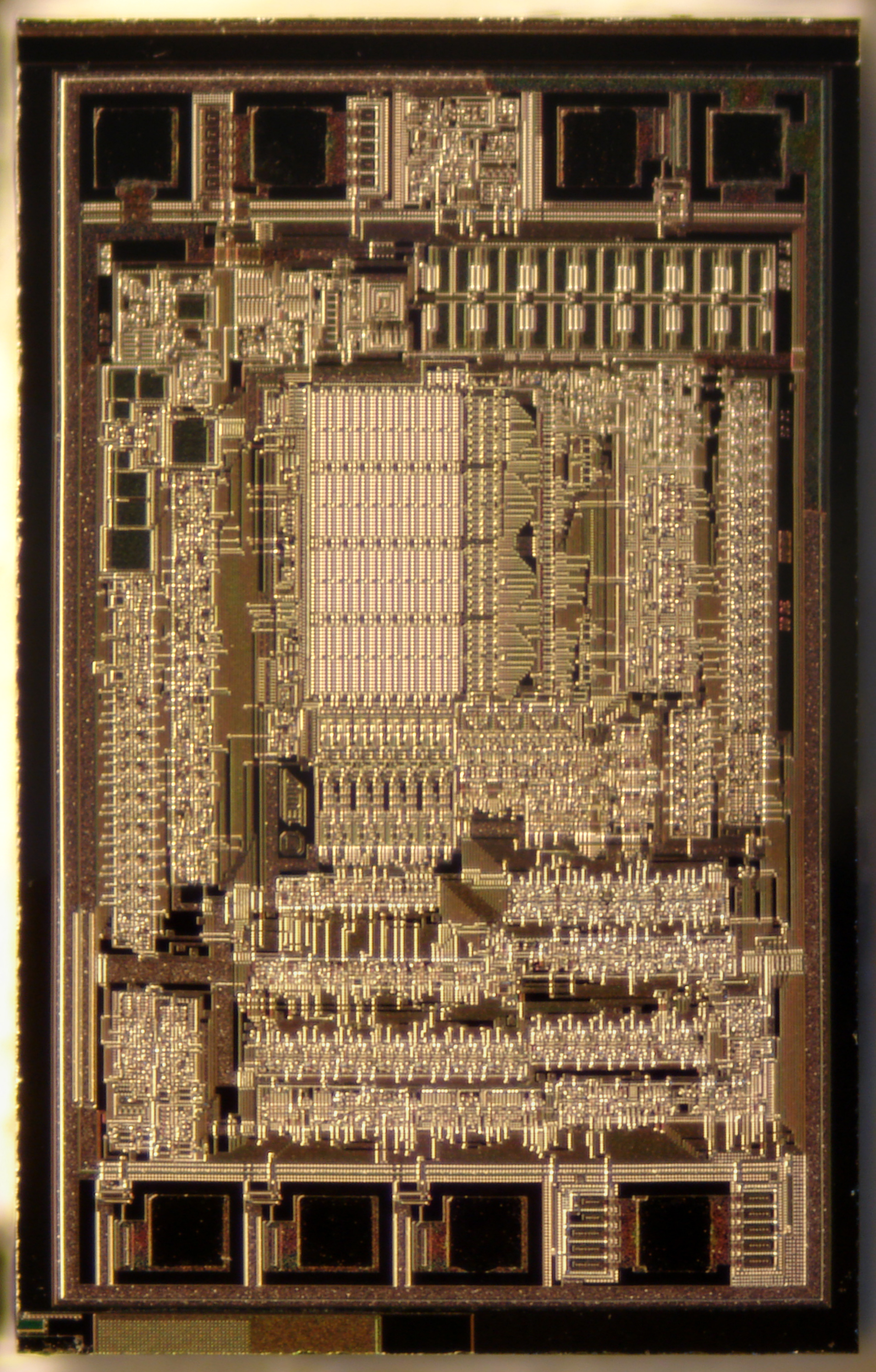

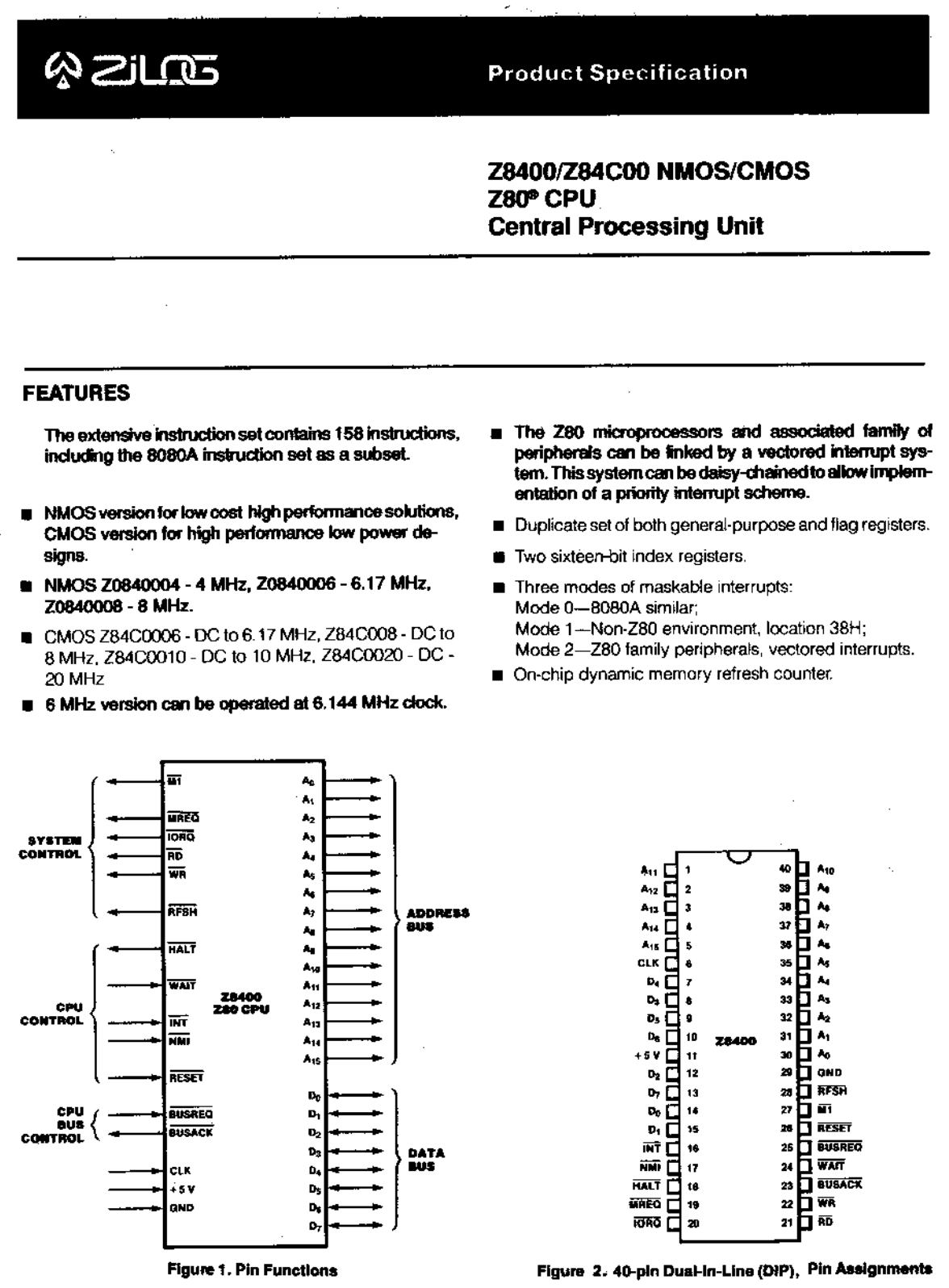

This is the Zilog Z80 Micro Processor, released in 1976 and discontinued in 2024.

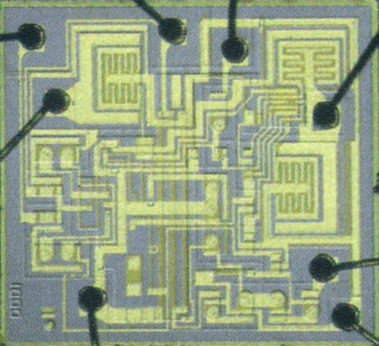

The actual chip is 0.35cm x 0.35cm in size, and the rest of the stuff you see is just so that we can connect wires to it. When you remove the protective layers on top and use a scanning electron microscope, you can see the actual transistors inside.

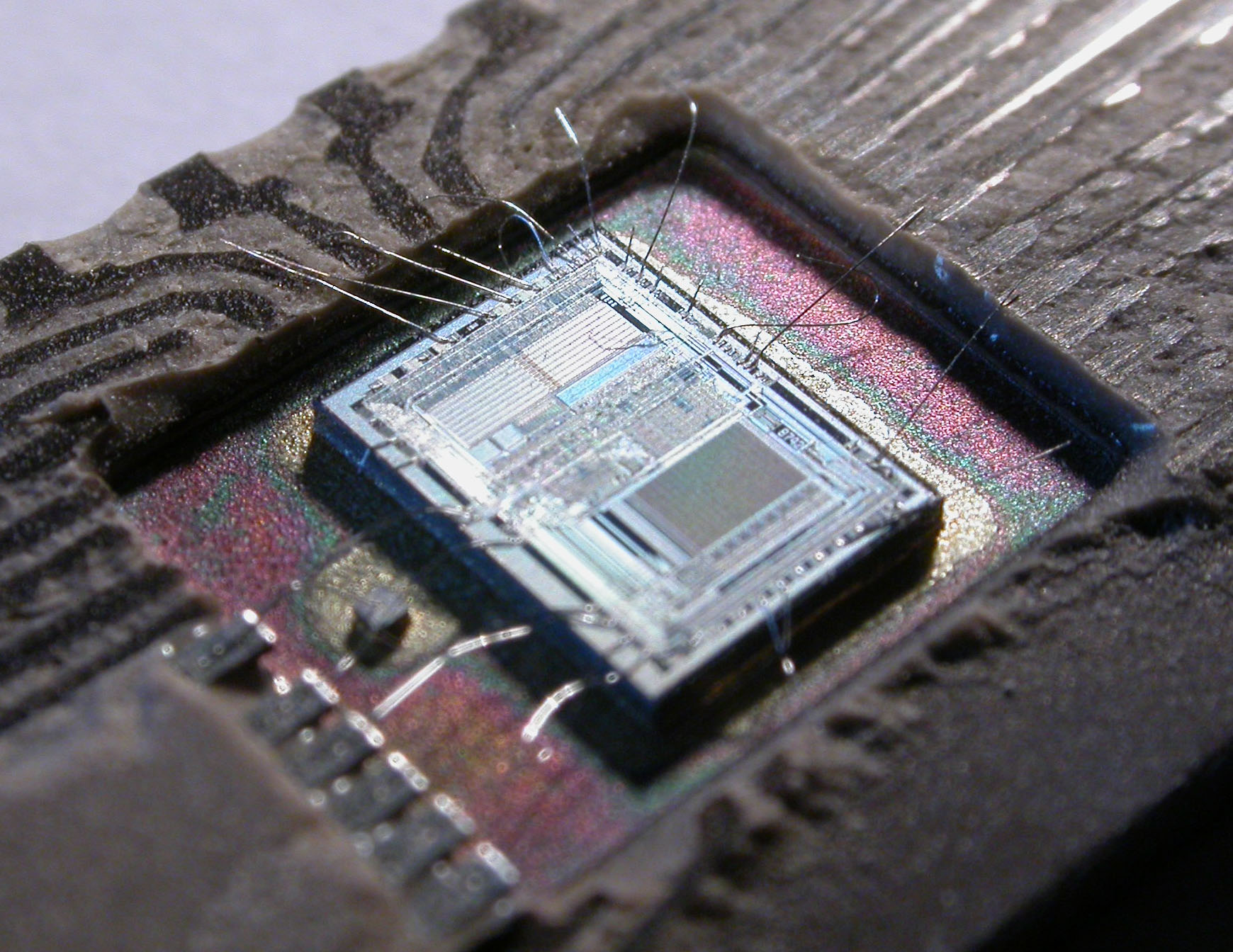

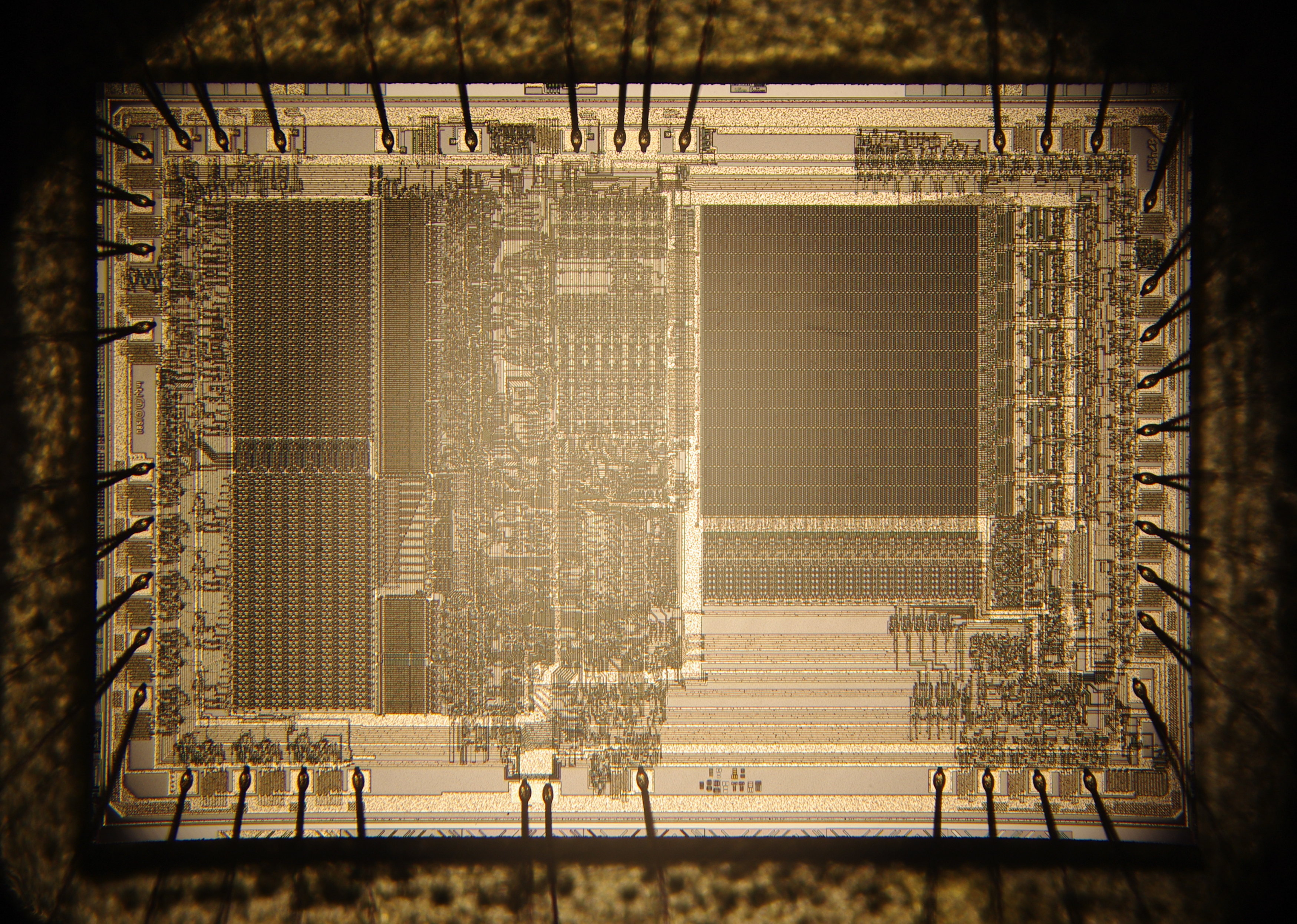

You see the legs on the outside are connected to the big square pads on the chip; there are 40 pads and 40 legs. Check out this picture with the wires sticking out.

This image is from the Intel 8742 microcontroller, but the idea is the same. You can see the wires sticking out from the pads; they will be connected to the legs, and then we can connect them to the rest of the system. This is again the Intel 8742 under a microscope, but you can also see the wires connected.

Before we go further, we will design a hypothetical processor so that you can understand the fundamental parts. Again, everything is about infinite feedback loops, but this time we don't use them to store bits of information, but to execute transformations.

The processor has 4 main components:

- Clock: its heart; every tick it executes the next transformation

- Registers: its working memory; they are just flipflops or SRAM cells

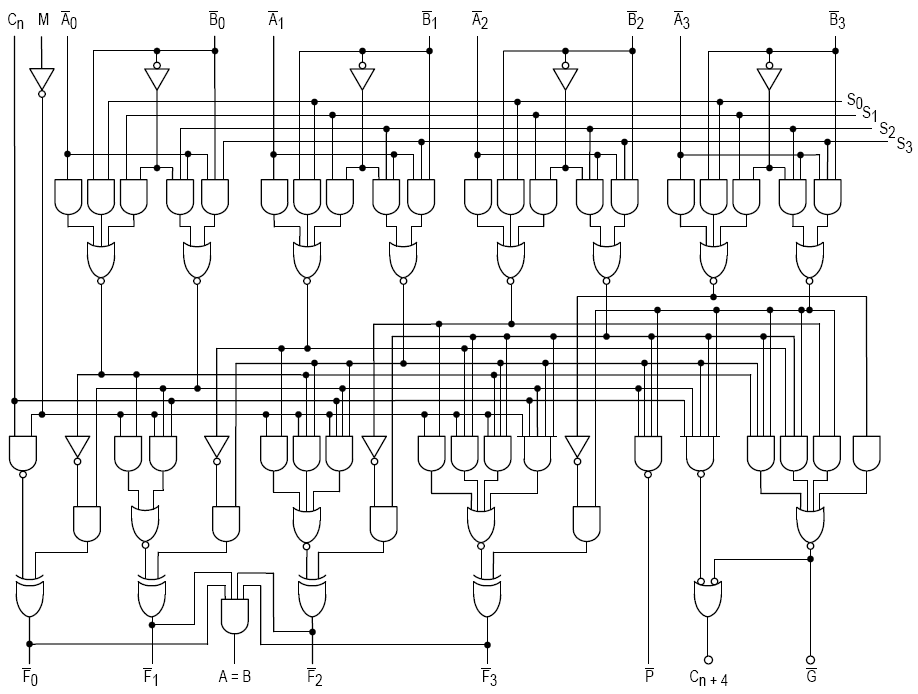

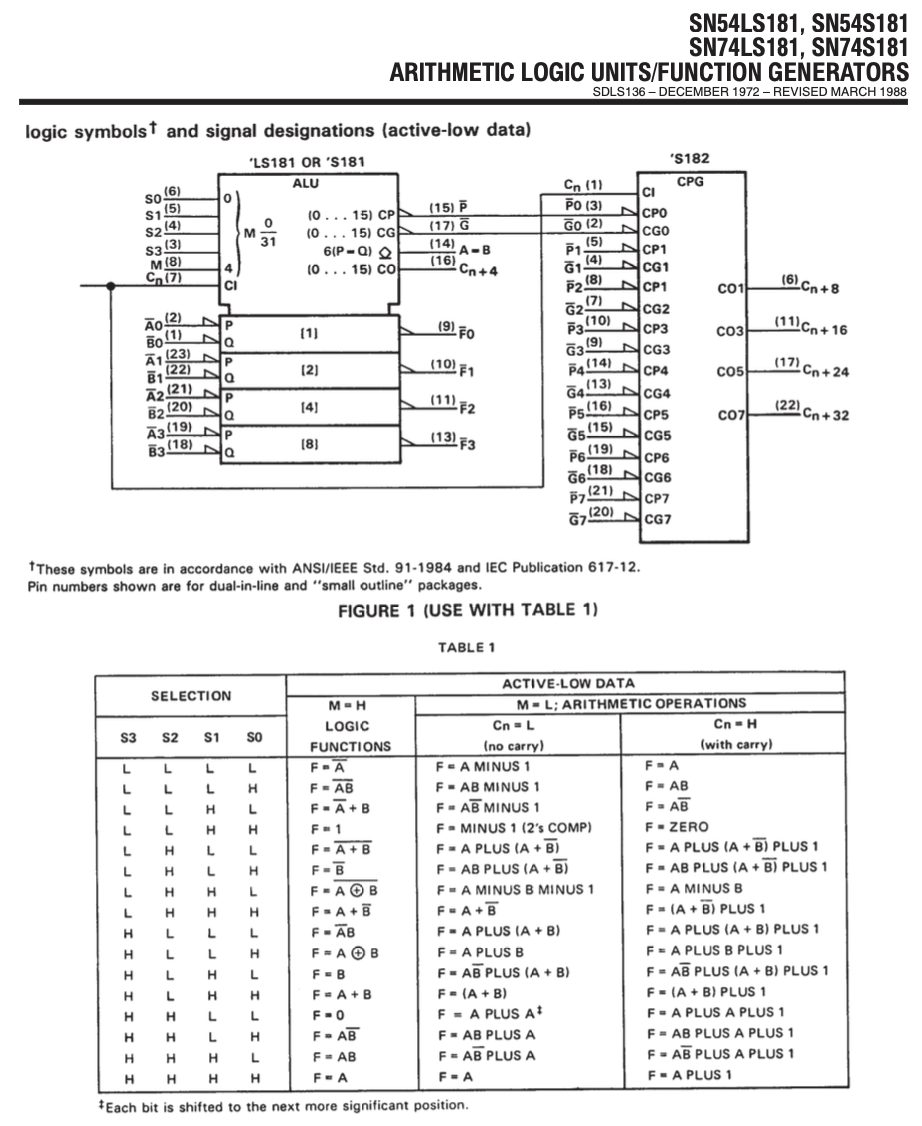

- ALU: Arithmetic unit, calculator; can add, subtract, does basic logic (AND, OR, etc.)

- Control Unit: reads instructions, decodes them and controls the other parts to execute them, and they control the control unit.

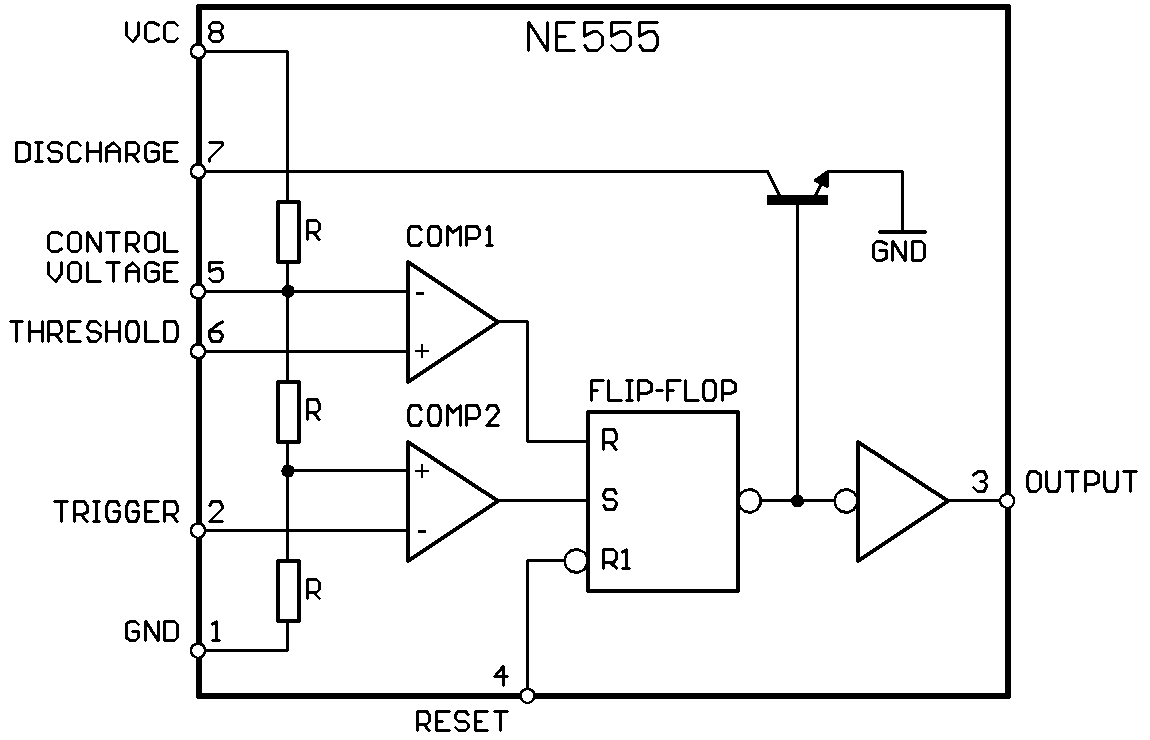

Clock

The clock is a circuit that oscillates at a particular frequency; its purpose is to

turn its output wire HIGH or LOW periodically. A very famous example is the 555

Timer. In its core, you guessed it, an infinite loop.

There are other kinds of timers that use oscillating crystals that can oscillate in the MHz range, and then there are circuits that are frequency multipliers, so MHz can turn into GHz. For reference, most modern CPUs are operating with clocks in the GHz range. The frequency multipliers usually are Phase-locked loops or PLLs. Names are not important, ideas are important. The 555 timer can achieve stable frequency from 0.1 Hz to 500 kHz.

The clock circuit can be outside or inside the CPU itself. Z80 has it outside, meaning that one of Z80's pins is connected to the output of the clock circuit.

The signal clock looks like this:

_____ _____ _____

CLK |_____| |_____| |_____

It is really just a heartbeat, HIGH, LOW, HIGH, LOW... 1 0 1 0 1 0.

In the book 'But How Do It Know?' by J. Clark Scott, and in The Art of Electronics, there is an example of a very simple pulse generator circuit.

Imagine a NOT gate: HIGH comes in, LOW comes out, but now we also connect

its output to its input, so just as 1 comes out, it feeds into its input and

very shortly after, it will output 0, but then 0 will be its input, so it will

output 1, and so on. In this case the pulse will be very very short, but you get

the idea.

When you buy a computer it says 'the CPU is at 3ghz' this is what they mean, it beats 3,000,000,000 times per second. The speed of light is about 300,000,000 meters per second, in various materials depending on their structure electrons move at speeds between 50 and 99% of the speed of light, so lets say in your computer they move at 150,000,000 m/s. That means that in 1 nanosecond an electron can travel about 15cm. Your computer ticks about 3 times per nanosecond, that means that in 1 clock pulse an electro can travel 5cm. Open up your computer and see, take a ruler and measure the distance between the RAM and the CPU, between the GPU and the RAM, and think about it.

AMD's Ryzen 7 can reach up to 5.6GHz, and and some of Intel's i9 can reach 5.8GHz. Imagine, 6 beats per nanosecond, the electrons can travel barely 3cm. Thats just about the width of 2 of your fingers.

This is how far we have gotten.

Why do we need a clock? Why can't things just be continous?

For our digital computers clocks make things easier to design and to make, because the clock allows us to orchestrate many components, and physically each one of them have some error, also you see how electrons will reach one before the other, just a tiny tiny bit, but that is enough to cause confusion if we want to disable one component and enable the other in the "same" time. There are clockless processors, but I have never programmed one. One example is the AMULET processor.

But I think the bigger question is: Why is it so natural for us to break things into steps, enable this, disable that..?

How would you sort the rings on this baby toy? You will immediately make a plan, first you would take all pieces out, then you look for the biggest one, then you place it first, then look for the second biggest. You can't do it all in the same time, cant even do it 2 at a time, and you have 2 hands.

Even as I am writing this, I can imagine a machine with many levers I pull one and this happens, then pull the other and that happens, then the next one.. I can control the machine. I can think like it. It is much harder to me to think like water.

Look at a wave.

It scares me and excites me in the same time, my thoughts run out. The interference between crests and troughs, how they collapse on themselves, how they interract with each other. Just look at it.

Have you seen boiling water? What do you think the bubbles are made of? Do you think its air? It is water vapour, water molecules so excited that they create a bubble, thrashing agains the rest of the water, the bubble has no air, it is just vacuum and water molecules 3-4 nanometers apart. But what happens as the bubble goes up, from the bottom of the pan?. It is an amazing question, first why does it even want to go up? Why doesn't all the water become gas in the same time? How come the bubbles from at the bottom when they are under the pressure of all the water above, they must hit other molecules so hard to break free.